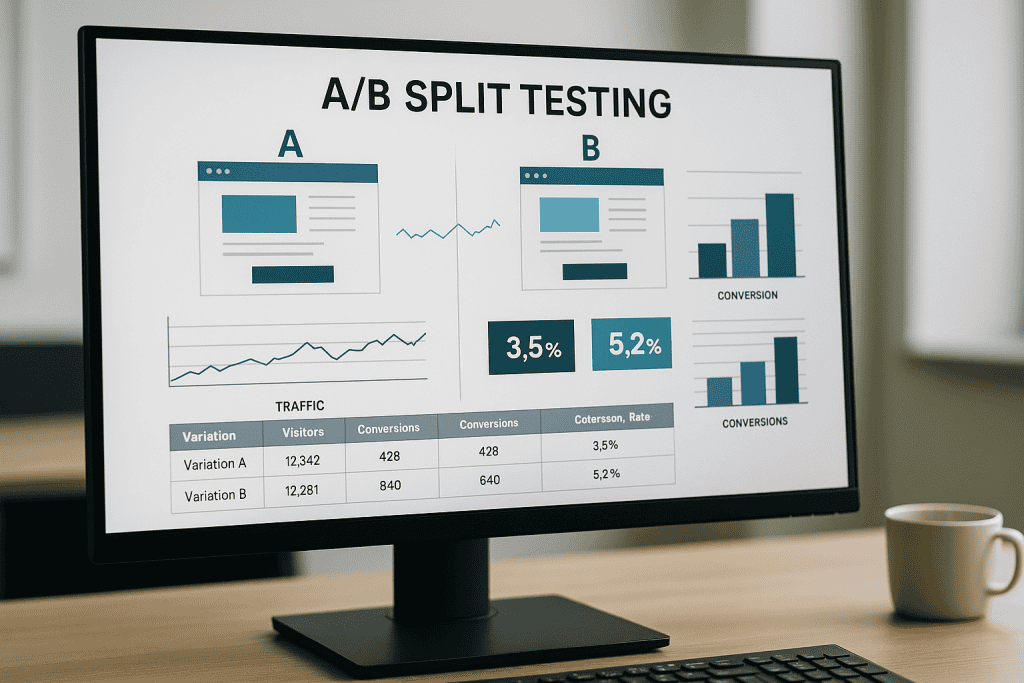

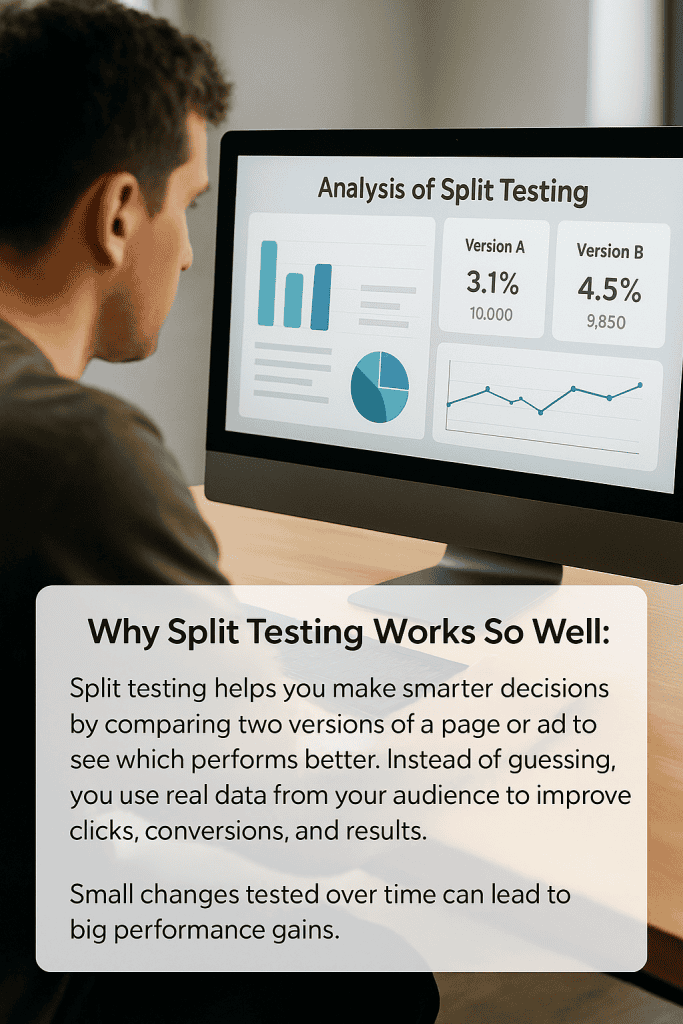

A/B testing is a practical way for businesses to figure out what actually works. You put two versions of a webpage, email, or app head-to-head and see which one gets more clicks, sales, or sign-ups from your audience. Instead of guessing, you see real data on what people respond to.

A lot of companies still make decisions based on gut feelings or what sounds good in a meeting. A/B testing switches things up by sending traffic to different versions and tracking what users actually do.

This process makes it clear which changes really help and which are just wasting your time.

If you want to run solid A/B tests, you need to plan, set things up carefully, and analyse the numbers properly. This guide breaks down the basics and some more advanced techniques too, so you can avoid rookie mistakes and get useful results.

It doesn’t matter if you’re a huge company or just getting started—these methods can help you make smarter decisions and boost your conversion rates.

What is AB Testing?

AB testing is pretty simple at its core. You put two versions of a webpage, email, or app in front of people and see which one performs better.

You use real data from actual users instead of just making guesses.

Definition of AB Testing

With AB testing, you split your traffic between two different versions of the same thing. Think of it as running a little experiment to see what people actually do when they land on your page.

Half your visitors get version A, and the other half see version B. You track which one hits your goal more often.

Version A is your control, the current setup. Version B is the new version with one change you want to try.

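The system randomly assigns each visitor to a group. Because chance alone decides who sees which version, the two groups are alike on average, so any difference in results can be credited to the change you made.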

Purpose of AB Testing

The whole point of AB testing is to make things better using what real users actually do. You can boost conversions by trying out different ideas and seeing what sticks.

Companies run AB tests to:

- Increase sales by tweaking product pages

- Improve email open rates by changing subject lines

- Lower bounce rates by playing with page layouts

- Get more sign-ups by testing different forms

You don’t have to guess if something will work. The data tells you.

You’ll see clearly which version actually does better. That’s how you make smarter business moves.

How AB Testing Differs from Other Experiments

AB testing stands out from other methods. Instead of asking people what they think, you measure what they do.

Multivariate testing messes with a bunch of things at once. AB testing is more focused—you just change one thing, so it’s easier to figure out what made the difference.

User testing means watching people use your site, maybe in a lab. AB testing just tracks what thousands of real visitors do on their own.

Analytics reviews look at what happened before. AB testing lets you see what happens when you make specific tweaks.

The big thing is that AB testing is a randomised controlled trial run with your real users. That makes the results far more trustworthy than opinions or looking back at old data.

AB Testing Process Overview

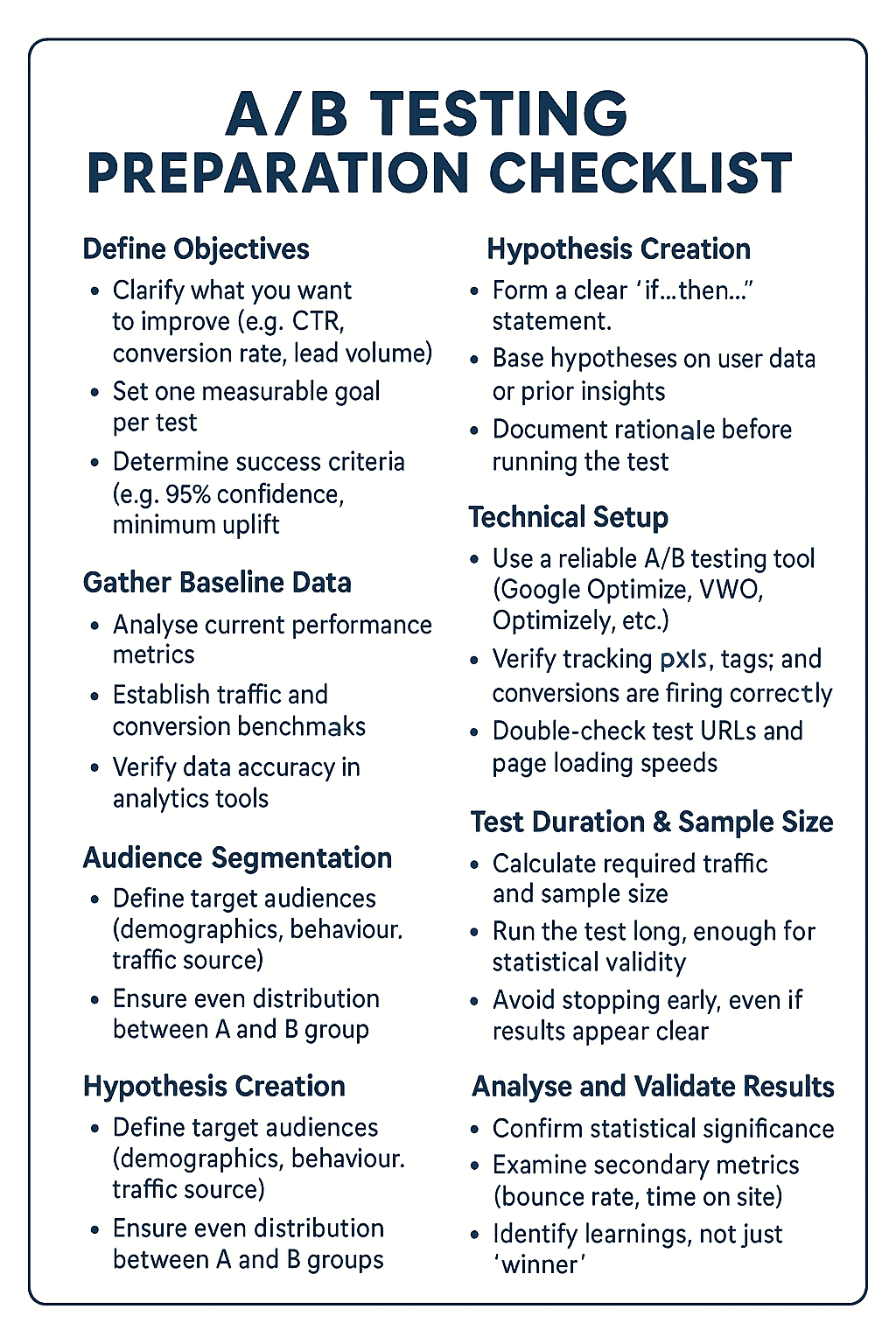

If you want your A/B test to work, you’ve got to nail three things before you even start. Set a clear goal, make a testable prediction, and pick the right numbers to track.

Setting Clear Objectives

Every A/B test needs a real business goal. Don’t try to do everything at once—pick one thing you want to improve.

Some good A/B testing goals:

- Raise conversion rates by a certain percent

- Lower bounce rates on landing pages

- Get more people to open or click your emails

- Boost engagement, like time on page

Tie your goal straight to something that matters. “Increase newsletter sign-ups by 15%” is way better than “make the site more engaging.”

Small wins on pages that get tons of traffic can make a bigger difference than huge changes on pages nobody visits.

Set a reasonable timeline. Most tests need anywhere from one to four weeks, depending on your traffic and how big a change you’re expecting.
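To turn that range into a number for your own site, divide the total sample you need by your daily traffic, then round up to whole weeks so weekdays and weekends are equally represented. A minimal sketch, assuming you already know the sample needed per version, that every daily visitor enters the test, and an even split; `estimate_test_days` is a hypothetical helper name:

```python
import math


def estimate_test_days(sample_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days needed to reach the planned sample, rounded up to full weeks.

    Assumes every daily visitor is eligible and traffic is split evenly.
    """
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    raw_days = math.ceil(sample_per_variant * variants / daily_visitors)
    # Round up to whole weeks so weekday/weekend swings are covered evenly.
    return math.ceil(raw_days / 7) * 7


print(estimate_test_days(6_000, 1_000))
```

With 6,000 visitors needed per version and 1,000 visitors a day, the raw answer is 12 days, which rounds up to two full weeks.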

Formulating Hypotheses

A good hypothesis says what you think will happen and why. This helps you set up your test and figure out what the results mean.

Try this format: “If we change [something], then [this will happen] because [reason based on user behaviour].”

Examples:

- “If we switch the button colour from blue to red, clicks will go up 10% because red feels more urgent.”

- “If we cut the checkout form from five fields to three, conversions will rise by 8% because people hate long forms.”

Back up your reasoning with research, analytics, or basic design logic.

Don’t test a bunch of changes at once. Stick to one clear change per test so you know what’s working.

Identifying Key Metrics

Choosing the right numbers to track is everything. You want one main metric and a few backups to get the full picture.

The main metric should match your goal. If you want more sales, track conversion rate or revenue per visitor.

Secondary metrics can show the bigger story:

- Engagement (time on page, pages per visit)

- Quality (bounce rate, return visits)

- Long-term stuff (customer value, retention)

Check your baseline numbers before you start. That way, you know what “normal” looks like and how much you need to move the needle.

A/B testing calculators can tell you how much traffic you’ll need to see real results.
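Under the hood, many of these calculators use a formula like the one below. This is a sketch of the common normal-approximation sample size for comparing two conversion rates, assuming a two-sided test, an even 50/50 split, and that you express the expected lift as a target conversion rate; the function name is illustrative, not taken from any particular tool.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, target: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH version to detect a change from baseline to target.

    Normal-approximation formula for a two-sided, two-proportion z-test
    with equal group sizes.
    """
    if target == baseline:
        raise ValueError("target must differ from baseline")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (baseline + target) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(baseline * (1 - baseline) + target * (1 - target)))
    return math.ceil(numerator ** 2 / (target - baseline) ** 2)


# A 5% relative lift on a 10% baseline (10% -> 10.5%)
print(sample_size_per_variant(0.10, 0.105))
# A jump from 10% to 15%
print(sample_size_per_variant(0.10, 0.15))
```

For those two examples, the first needs roughly 58,000 visitors per version and the second about 700, which is why small expected lifts are so expensive to test.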

Skip the vanity metrics. More page views don’t mean much if nobody’s buying.

Preparing for an A/B Test

Getting ready for an A/B test can make or break your results. It’s all about picking the right thing to test, knowing who’s in your audience, and grabbing those baseline numbers before you kick things off.

Selecting Elements to Test

You want to test stuff that actually affects your main goals. High-traffic spots like homepages, product pages, and checkout flows are usually best.

Stuff worth testing:

- Headlines and titles

- Call-to-action buttons (text, colour, size)

- Images or videos

- Form fields and layouts

- Navigation menus

- How you show prices

Big changes usually produce larger, easier-to-detect differences than tiny tweaks, so a full page redesign can show a bigger effect than just changing a button colour. The catch is that a redesign changes many things at once, so you won’t know which one made the difference. For a clean read on what works, stick to one change at a time.

Start with what grabs users’ attention. People usually look at headlines first, then images, then buttons. Focus on those before you mess with the small stuff.

Use feedback and analytics to pick what to test. If people complain about checkout, test a new process. If product pages have high bounce rates, try a new layout.

Segmenting Your Audience

Not all users act the same way, so you’ve got to break them into groups. Newcomers don’t behave like regulars. Mobile users have different habits than desktop folks.

Ways to segment:

- Device: Mobile, desktop, tablet

- Traffic source: Search, ads, social

- User type: First-timers, return visitors, frequent buyers

- Demographics: Age, location, language

Make sure your segments are big enough to matter. Each one should have at least 100 conversions per version, or you’ll just end up guessing.

Check your analytics to spot which segments are most important. Focus your tests where they’ll have the biggest impact.

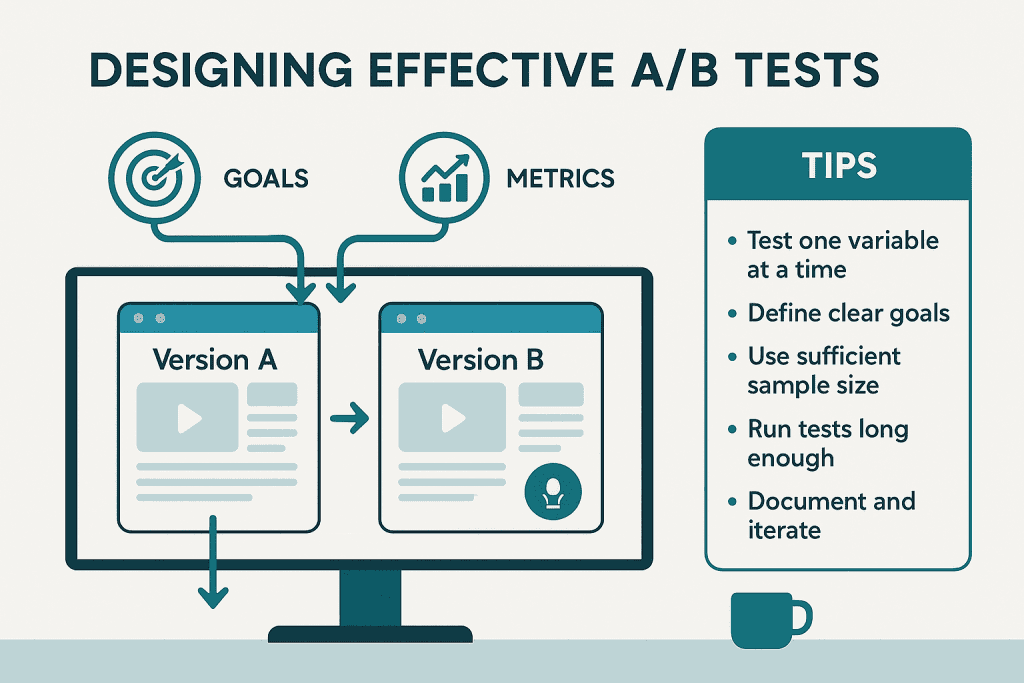

Designing Effective A/B Tests

You need to design your test with clear variants, random user assignment, and as little bias as possible. These steps make sure your results actually mean something.

Creating Variants

Good variants change just one thing at a time. That way, you know exactly what caused the difference.

The control is your current version. The variant (or treatment) is the same except for one change.

Examples of strong variants:

- Button colour: Blue vs. Red

- Headline: “Save Money” vs. “Cut Costs”

- Image placement: Top vs. Bottom

Not-so-great variants:

- Changing button colour and headline at the same time

- Tweaking layout and copy together

- Messing with multiple elements at once

You should design each variant with a hypothesis in mind. Ask yourself what you want to learn about your users.

Make the change obvious enough that people will notice. Tiny tweaks, like a one-pixel font bump, probably won’t move the needle.

Randomisation Techniques

Simple randomisation just assigns people to versions at random. This works if you’ve got at least 1,000 users per version.

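Stratified randomisation splits users into groups (like by device or location) and then randomises within each group. Block randomisation assigns users in small batches, each split evenly between versions, so group sizes stay balanced as traffic comes in.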

| Method | Best For | Sample Size |
|---|---|---|
| Simple | Big audiences | 1,000+ per version |
| Stratified | Mixed user types | 500+ per group |
| Block | Step-by-step testing | 100+ per block |
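Stratified and block randomisation can be combined: keep a separate block for each stratum, so every few mobile visitors (say) are split evenly between versions. A minimal sketch, assuming device type is the stratum and a block size of four; the class name is hypothetical. It keeps everything in memory and doesn’t remember returning users, so a real setup would also store each assignment.

```python
import random
from collections import defaultdict
from typing import Optional


class StratifiedBlockAssigner:
    """Assign users to 'A' or 'B' in shuffled blocks, separately per stratum.

    Every block of `block_size` arrivals within a stratum (e.g. 'mobile')
    holds equal numbers of A and B, so each stratum stays balanced as
    traffic arrives.
    """

    def __init__(self, block_size: int = 4, seed: Optional[int] = None):
        if block_size < 2 or block_size % 2:
            raise ValueError("block_size must be a positive even number")
        self.block_size = block_size
        self.rng = random.Random(seed)
        # stratum -> slots still unused in that stratum's current block
        self.pending = defaultdict(list)

    def assign(self, stratum: str) -> str:
        if not self.pending[stratum]:
            block = ["A", "B"] * (self.block_size // 2)
            self.rng.shuffle(block)
            self.pending[stratum] = block
        return self.pending[stratum].pop()


# Hypothetical traffic: eight mobile visitors, then four desktop visitors.
assigner = StratifiedBlockAssigner(block_size=4, seed=42)
arrivals = ["mobile"] * 8 + ["desktop"] * 4
assignments = [(device, assigner.assign(device)) for device in arrivals]
print(assignments)
```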

Keep users in the same version for the whole test. That way, you don’t confuse anyone and your data stays clean.

Cookies work for tracking returning users. If people log in, user IDs are even better.

Randomise before anyone sees the test. That keeps things fair and avoids bias.
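One common way to get both consistency and up-front randomisation is to derive the version from a hash of the user ID (or cookie ID) instead of flipping a fresh coin on every visit. A sketch, assuming string user IDs and a hypothetical experiment name; testing tools implement their own variations of this idea.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically map a user to 'A' (control) or 'B' (variant).

    Hashing experiment + user ID means the same user always lands in the
    same version, and different experiments get independent splits.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Take the first 8 hex chars (32 bits) and scale to [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "A" if bucket < split else "B"


print(assign_variant("user-123", "checkout-button"))
```

Including the experiment name in the hash keeps a user’s bucket in one test from predicting their bucket in another.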

Avoiding Common Biases

Selection bias creeps in if certain users end up in one version more often. Random assignment and equal traffic help dodge this.

Seasonal bias pops up if you run tests during holidays or big events. Try to test during regular times for the best results.

Sample size bias happens if you stop the test too soon or don’t have enough users. Figure out your needed sample size before you start.

Novelty bias means people might like something just because it’s new. Usually, this wears off after a week or two.

Confirmation bias can make you see what you want in the results. Decide what success looks like before you peek at the numbers.

Time-of-day bias can mess things up if one version gets more daytime traffic. Make sure both versions get a fair shot all day long.

You should jot down possible sources of bias when you plan your test. Regular checks can catch problems before they mess things up.

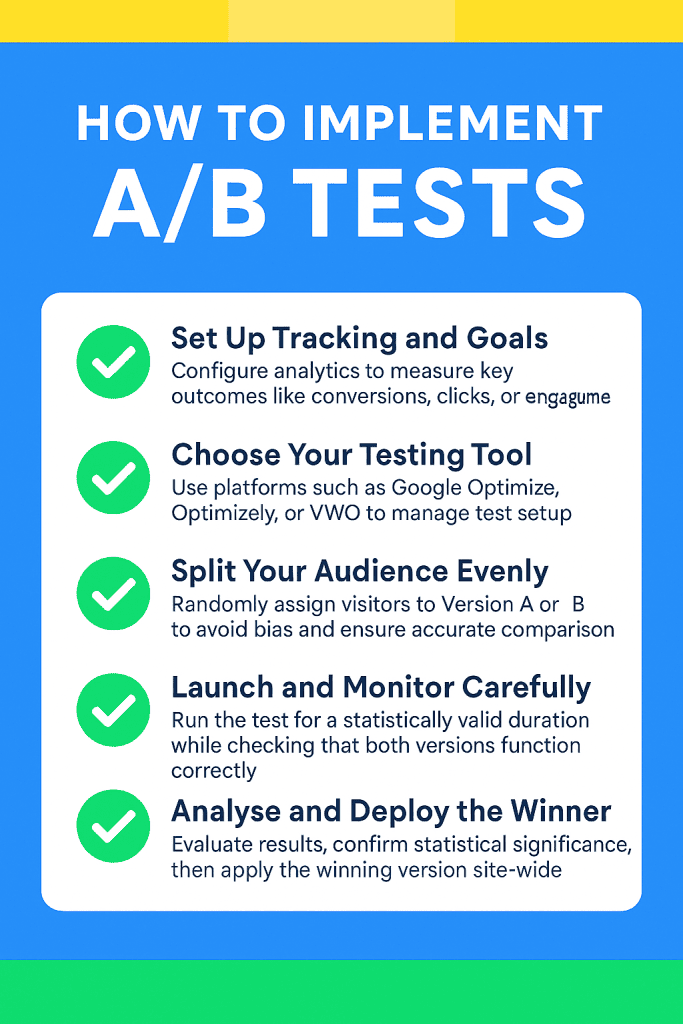

Implementing A/B Tests

Getting your AB test live means picking the right tools, setting things up right, and making sure your numbers are legit. If you want your test to actually tell you something, you’ve got to track data accurately and measure results you can trust.

Choosing AB Testing Tools

Picking an AB testing platform really shapes how easily your team can set up, launch, and analyse experiments. Some names you’ll hear a lot are Optimizely, VWO, and Adobe Target, which are all solid for website testing, plus Google Optimize, which Google shut down in September 2023.

Popular options:

- Google Optimize: Offered free basics and worked nicely with Google Analytics, but is no longer available

- Optimizely: Lots of targeting and personalisation features

- VWO: Has built-in heatmaps and session recordings

- Adobe Target: Aimed at enterprises, offers AI-backed testing

Think about your monthly traffic when you choose. Most tools price by how many visitors you include in tests. If you get under 10,000 visitors a month, you’ll probably want to start with something free.

A good visual editor helps a lot. Marketers can change pages without touching code. If you’re more technical, you’ll want support for custom JavaScript and CSS tweaks.

Integrations really matter too. Make sure your tool connects with your analytics, CRM, or marketing automation platforms. Smooth data flow saves a lot of headaches later.

Technical Setup and Integration

Setting things up right keeps your data accurate and your results reliable. For most AB testing tools, you just add a JavaScript snippet to your site’s header.

Installation Steps:

- Drop the tracking code on every page

- Set conversion goals and metrics

- Define audience segments for targeting

- Link up your analytics platform

- Test everything in preview mode

Heavy scripts can slow your site down, which annoys users. Try to pick tools with lightweight or async code.

If you’ve got multiple websites or subdomains, cross-domain tracking becomes a must. Set up your tool to follow users across all your domains for a full picture.

Testing on mobile? Make sure your tool handles responsive designs and can track mobile-specific events accurately.

Ensuring Test Reliability

You want your results to reflect real differences, not random noise. Most teams aim for 95% confidence, checked once the test has reached its planned sample size rather than the first moment the dashboard shows it.

Key Reliability Factors:

- Sample Size: Figure out how many visitors you need before you start

- Test Duration: Run tests for at least 1–2 weeks, or a full business cycle

- Traffic Allocation: Split traffic evenly between test versions

- External Factors: Watch out for seasonality, promos, or site updates

Try not to peek at results too soon. Data-driven decisions need patience to reach significance.
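A quick simulation shows why peeking matters. Run many A/A tests, where both versions are identical, and compare how often a single end-of-test check declares a winner with how often stopping at the first of several interim checks showing p < 0.05 would. A sketch, assuming a pooled two-proportion z-test, a 10% conversion rate, and ten equally spaced looks; all parameter values are illustrative.

```python
import math
import random
from statistics import NormalDist


def z_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for a difference in conversion rates (pooled z-test)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(runs=500, looks=10, visitors_per_look=200, rate=0.10, seed=7):
    """Simulate A/A tests (both versions identical) and count false positives.

    Returns (share with p < 0.05 at the single planned final check,
             share with p < 0.05 at ANY of the interim checks).
    """
    rng = random.Random(seed)
    fixed_hits = peeking_hits = 0
    for _ in range(runs):
        conv_a = conv_b = visitors = 0
        flagged_at_some_look = False
        for _ in range(looks):
            # Each new visitor converts with the same probability in A and B.
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_look))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_look))
            visitors += visitors_per_look
            p = z_test_p_value(conv_a, visitors, conv_b, visitors)
            if p < 0.05:
                flagged_at_some_look = True  # a "peeker" would stop here and call a winner
        fixed_hits += int(p < 0.05)  # p from the final look only
        peeking_hits += int(flagged_at_some_look)
    return fixed_hits / runs, peeking_hits / runs


fixed_rate, peeking_rate = simulate_aa_tests()
print(f"check once at the end: {fixed_rate:.1%} false positives")
print(f"stop at first p < 0.05: {peeking_rate:.1%} false positives")
```

Checking once at the planned end keeps false positives near the 5% you signed up for, while stopping at the first "significant" peek typically pushes the rate well above that, even though A and B are identical.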

Always run quality checks to avoid technical slip-ups. Check that each variation looks right on different devices and browsers.

Keep a record of test details like start date, audience, and goals. This helps you remember what you did and avoid rerunning failed ideas.

Check in on your tests daily for any tech hiccups, but don’t jump to conclusions about winners too early.

Analysing AB Test Results

You’ll want to track the basics: conversion rates, click-throughs, revenue per visitor. Statistical significance tells you if the differences between versions are actually meaningful, but you also need to think about whether those differences matter in the real world.

Collecting and Interpreting Data

First step: collect solid data from both versions for the whole test period. Marketers should track main metrics like conversions, plus things like bounce rates and time on page.

Keep your tracking consistent across all versions. Use the same methods, timeframes, and user groups. If something’s off, your results might not mean much.

Key metrics to watch:

- Primary metrics (conversions, sales, sign-ups)

- Engagement metrics (time on page, scroll depth)

- Technical stuff (load times, error rates)

Raw data needs a closer look before you decide anything. Analysing AB testing results means checking both the actual numbers and the percentage changes.

Watch for patterns in user behaviour beyond just conversions. A higher conversion rate isn’t great if your average order value drops or customer lifetime value takes a hit.
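Here’s a small sketch of both checks: absolute change (in percentage points) versus relative change, and revenue per visitor alongside conversion rate. The numbers are hypothetical, chosen so that version B wins on conversion rate but loses on revenue per visitor because orders get smaller; the class and field names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    visitors: int
    orders: int
    revenue: float

    @property
    def conversion_rate(self) -> float:
        return self.orders / self.visitors

    @property
    def average_order_value(self) -> float:
        return self.revenue / self.orders if self.orders else 0.0

    @property
    def revenue_per_visitor(self) -> float:
        return self.revenue / self.visitors


def compare(control: VariantResult, variant: VariantResult) -> dict:
    """Absolute and relative changes for the headline metrics."""
    abs_lift = variant.conversion_rate - control.conversion_rate
    return {
        "absolute_lift_pp": abs_lift * 100,  # percentage points
        "relative_lift_pct": abs_lift / control.conversion_rate * 100,
        "aov_change": variant.average_order_value - control.average_order_value,
        "rpv_change": variant.revenue_per_visitor - control.revenue_per_visitor,
    }


# Hypothetical numbers: B converts more often but with smaller orders.
control = VariantResult(visitors=10_000, orders=300, revenue=24_000.0)
variant = VariantResult(visitors=10_000, orders=330, revenue=21_450.0)
print(compare(control, variant))
```

Here B’s conversion rate is 0.3 percentage points higher, a 10% relative lift, yet revenue per visitor falls because the average order drops from 80 to 65.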

Statistical Significance in AB Testing

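Statistical significance tells you whether a difference is bigger than random noise would usually produce. Most AB tests aim for 95% confidence: if the two versions actually performed the same, a difference this large would show up by chance less than 5% of the time.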

Sample size matters a ton. You need enough people in your test to spot real differences. Small tests often give you unreliable answers.

Understanding statistical significance keeps you from making calls on half-baked data. Run your tests until you hit both your sample size and confidence targets.

What you need for significance:

- Calculate sample size before you start

- Set confidence levels (usually 95% or 99%)

- Look for p-values under 0.05 if you want 95% confidence

- Let tests run the full planned duration
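For conversion rates, many calculators run something like a two-proportion z-test. A minimal sketch using only Python’s standard library, assuming conversions are independent yes/no outcomes and samples are large enough for the normal approximation; the example counts are made up.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) comparing B's conversion rate with A's."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate if there is no real difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z_score = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z_score)))
    return z_score, p_value


# Hypothetical results: 10,000 visitors per version, control converting at 5%
for label, conv_b in [("B at 5.6%", 560), ("B at 5.8%", 580)]:
    z_score, p_value = two_proportion_z_test(500, 10_000, conv_b, 10_000)
    verdict = "significant" if p_value < 0.05 else "not significant"
    print(f"{label}: z = {z_score:.2f}, p = {p_value:.3f} ({verdict} at 95%)")
```

The same 10,000-visitor test can land on either side of the 0.05 line depending on the size of the gap: 5.6% vs. 5% falls just short, while 5.8% vs. 5% clears it.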

Statistical calculators can help you know when you’ve hit significance. Just remember: significance doesn’t always mean it’s worth changing your business.

Drawing Meaningful Conclusions

Statistical significance is just the start. You also need to check if the improvement really matters for your business.

Think about the size of the change and what it costs to implement. A tiny 0.1% gain might not be worth the effort, but a 15% jump is a no-brainer.
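One way to weigh the size of a change against its cost is to look at a confidence interval for the lift and compare it with the smallest improvement that would justify the work. A sketch, assuming a simple Wald interval (reasonable for large samples) and a hypothetical 0.5-percentage-point threshold that you would set from your own implementation costs.

```python
import math
from statistics import NormalDist


def lift_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Wald confidence interval for the absolute difference (B - A) in conversion rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Hypothetical: only ship if the lift is at least 0.5 percentage points.
MIN_WORTHWHILE_LIFT = 0.005
low, high = lift_confidence_interval(500, 10_000, 580, 10_000)
if low >= MIN_WORTHWHILE_LIFT:
    decision = "ship: even the low end clears the bar"
elif high < MIN_WORTHWHILE_LIFT:
    decision = "skip: even the high end falls short"
else:
    decision = "unclear: the interval straddles the bar"
print(f"{low:.4f} to {high:.4f} -> {decision}")
```

With these hypothetical counts the interval sits entirely above zero, so the lift is statistically significant, but its low end is below the 0.5-point bar, so significance alone doesn’t settle whether it’s worth shipping.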

Good analysis of AB testing results digs into how different segments respond. Age, device, location, and where your traffic comes from can all affect results.
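A quick way to do that is to break the same results down by segment and flag any segment too small to read. A sketch with made-up counts, reusing the rough 100-conversions-per-version rule of thumb from the segmenting section; the data layout and function name are illustrative.

```python
# Hypothetical per-segment counts:
# segment -> (conversions_A, visitors_A, conversions_B, visitors_B)
segments = {
    "mobile": (240, 6_000, 300, 6_000),
    "desktop": (250, 4_000, 255, 4_000),
    "tablet": (12, 500, 20, 500),
}

MIN_CONVERSIONS = 100  # per version; a rough rule of thumb, not a statistical guarantee


def segment_report(data: dict) -> list:
    """Per-segment conversion rates, relative lift, and a 'big enough to read' flag."""
    rows = []
    for name, (conv_a, n_a, conv_b, n_b) in data.items():
        rate_a, rate_b = conv_a / n_a, conv_b / n_b
        rel_lift = (rate_b - rate_a) / rate_a * 100 if rate_a else float("nan")
        enough = min(conv_a, conv_b) >= MIN_CONVERSIONS
        rows.append((name, rate_a, rate_b, rel_lift, enough))
    return rows


for name, rate_a, rate_b, rel_lift, enough in segment_report(segments):
    note = "" if enough else "  <- too few conversions to trust"
    print(f"{name:8} A={rate_a:.2%}  B={rate_b:.2%}  lift={rel_lift:+.1f}%{note}")
```

In this made-up data, the tablet segment shows the biggest lift but has only 12 and 20 conversions, so it gets flagged rather than celebrated.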

What affects your conclusions:

- How big the effect is and if it matters for your goals

- Whether the result is consistent across user groups

- Any outside factors during the test

- Long-term impact, not just short-term wins

Write down everything you learn, even if a test flops or surprises you. Failed tests still teach you what not to do next time.

Let your test results guide your bigger strategy. Winning versions should become your new baseline, and you can keep improving from there.

Best Practices for AB Testing

Testing at the right pace keeps your data clean and your results meaningful. Don’t rush to test everything at once or you’ll end up with messy data.

Testing Frequency

Run AB tests for at least one or two business cycles before making any calls. This helps even out weird weekly or seasonal patterns.

The best teams test thoughtfully instead of cranking out tons of experiments. Testing too often can cause:

- Sample pollution: Users see mixed-up experiences

- Statistical errors: Not enough time to hit significance

- Resource strain: Your team gets stretched too thin

Suggested test timing:

- Small tweaks: At least 1–2 weeks

- Big redesigns: 3–4 weeks minimum

- Seasonal stuff: Run through the whole season

Wait for one test to reach significance before starting another on the same page element. This keeps your data clean.

Managing Multiple Tests

You can run tests on different parts of your site at the same time if they don’t overlap. Just make sure you’re not testing the same element or user group in more than one experiment.

Safe combos:

- Homepage banner + checkout button

- Email subject lines + landing page copy

- Mobile app + desktop site

Don’t test together:

- Two headlines on the same page

- Overlapping user segments

- Steps in the same conversion funnel

Keep a testing calendar to track what’s running when. This avoids conflicts and helps with planning. Track which users saw which versions to keep your data solid.

Split your traffic evenly between control and variant. Most tools do this automatically, but double-check to be sure.
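One way to double-check is a sample ratio mismatch (SRM) test: compare the visitor counts you actually got with the split you configured. A minimal sketch, assuming a two-version test and a chi-square goodness-of-fit check; the 0.001 cut-off in the docstring is a commonly used convention, not a universal rule, and the function name is illustrative.

```python
import math


def sample_ratio_mismatch_p(visitors_a: int, visitors_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value (1 degree of freedom) for the traffic split.

    A very small p-value (a common cut-off is < 0.001) suggests the split is
    broken, e.g. by a redirect or tracking bug, rather than random variation.
    """
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


print(sample_ratio_mismatch_p(10_050, 9_950))
print(sample_ratio_mismatch_p(10_400, 9_600))
```

A 10,050 vs. 9,950 split is well within normal variation, while 10,400 vs. 9,600 on the same total is very unlikely under a true 50/50 split and worth investigating before you trust any results.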

Iterating on AB Test Findings

When you find a winner, roll it out everywhere and start planning the next test.

After the test:

- Launch the winning version across your site

- Dig into why it worked

- Come up with new ideas for the next round

- Test other elements on the same page

Write down what you learn from every test. Even failed ones are useful—they tell you what your audience doesn’t care about. AB testing is all about learning from both wins and losses.

Build on your results with small tweaks. If a red button beats a blue one, try different shades of red or change the size next time.

Keep a running log of all your test outcomes. That way, you don’t repeat old mistakes and your team gets smarter over time.

Common Challenges in AB Testing

Even experienced teams run into big roadblocks with AB testing. The usual culprits? Not enough participants, not running tests long enough, or just getting weird results that don’t make sense.

Sample Size Issues

Tons of teams deal with sample size headaches. If your test groups are too small, you just can’t spot real differences.

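Minimum sample needs depend on your baseline conversion rate and the lift you expect. Detecting a 5% relative lift on a 10% baseline (10% to 10.5%) takes roughly 58,000 visitors per version at 95% confidence and 80% power, while a jump from 10% to 15% needs only about 700. Smaller changes need even more people.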

Low traffic can drag tests out for months. If your pages don’t get many visits, you might feel pressure to end tests early—but that can mess up your results.

Segmenting users (like testing just on mobile or return customers) shrinks your sample size even more. So plan for that before you start.

Power calculations can tell you how many visitors you actually need, based on your current conversion rates and goals.
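If your traffic is fixed, you can flip the question around and ask what the smallest lift is that your test could reliably detect. A sketch of the approximate minimum detectable effect, assuming a 50/50 split, 95% confidence, and 80% power; the simplification noted in the docstring is deliberate, and the function name is illustrative.

```python
import math
from statistics import NormalDist


def minimum_detectable_lift(baseline: float, visitors_per_variant: int,
                            alpha: float = 0.05, power: float = 0.8) -> float:
    """Approximate smallest absolute lift detectable with the traffic you have.

    Simplification: uses the baseline variance for both versions, which is
    reasonable when the lift is small relative to the baseline.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    se = math.sqrt(2 * baseline * (1 - baseline) / visitors_per_variant)
    return (z_alpha + z_beta) * se


# A low-traffic page: 5,000 visitors per version over the whole test, 10% baseline.
mde = minimum_detectable_lift(0.10, 5_000)
print(f"Can reliably detect about {mde:.2%} absolute ({mde / 0.10:.0%} relative)")
```

With 5,000 visitors per version and a 10% baseline, the smallest reliably detectable lift comes out at roughly 1.7 percentage points, or about a 17% relative improvement.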

Test Duration Problems

Picking the wrong test length leads to bad data. Short tests miss weekly traffic swings.

Weekly cycles hit every site differently. B2B might be slow on weekends, retail picks up on Fridays. Run tests for at least a full week to catch these trends.

Seasonal changes can mess with your data too. A test before a big holiday might look very different than one during a normal week. Always keep outside events in mind.

Stopping early is tempting when the numbers look good, but early leads often shrink or vanish as more data comes in. Waiting until you reach your planned sample size is tough, but it’s worth it to avoid false positives.

Running tests too long wastes time and resources. Once you hit your sample and significance, wrap it up and move on.

Dealing with Unexpected Results

Sometimes your results make no sense. Usually, that means something went wrong with setup, not that you’ve discovered a miracle. Weird results can lead to bad decisions if you don’t check them out.

Negative outcomes might just be tech issues or tracking problems, not a real drop in performance. Investigate any sudden drops right away.

Huge wins (like a 300% jump) are almost always a sign of an error somewhere. Double-check everything before you celebrate.

If your test is inconclusive, that’s still helpful—it means your change didn’t really move the needle for users.

Odd data spikes need a closer look. Things like traffic surges, outages, or ad campaigns during your test can throw off your results.

Advanced AB Testing Strategies

If you want to get more out of your AB testing, try targeting specific user groups, testing multiple things at once, or tying your experiments to bigger marketing goals. These advanced moves take more planning, but the insights are worth it.

Personalisation and Targeting

Segmenting your users can turn basic AB tests into powerful personalisation tools. Try splitting audiences by demographics, behaviour, or even purchase history.

Geographic targeting is great for global brands. You might run different headlines for UK vs. US visitors, matching local language and style.

Behavioural segmentation works even better. New visitors act differently than loyal customers, so tailor your tests accordingly.

Advanced AB testing platforms can handle these complex segments for you, crunching the numbers for each group.

Device-based targeting is becoming huge. Mobile users want quick, simple experiences. Desktop users usually stick around longer and read more.

Targeting ideas:

- Purchase history and spend

- Country or region

- Device and browser type

- Where the traffic came from (social, email, search)

- Time since last visit

Multivariate Testing Overview

Multivariate testing lets you look at how several page elements work together, instead of just testing one thing at a time. You can see how different pieces on a page play off each other.

Usually, you’ll test stuff like the headline, the main image, and maybe a call-to-action button, all at once. You end up with a bunch of combinations by mixing and matching versions of each element.

Example test structure:

- Headlines: A, B, C

- Images: 1, 2, 3

- Buttons: X, Y

That gives you 18 different combos (3×3×2). Each visitor lands on one of these at random.

You’ll need a lot more traffic for multivariate tests. Every combo needs enough eyeballs to actually matter statistically. If you’re testing a bunch of elements, you might need thousands of visitors every day.
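Every extra element multiplies the number of combinations, and every combination needs its own share of traffic. A sketch using the 3×3×2 structure above (three headlines, three images, two buttons) and a hypothetical 5,000 visitors per combination; swap in the per-version sample your own calculation gives.

```python
from itertools import product

headlines = ["A", "B", "C"]
images = ["1", "2", "3"]
buttons = ["X", "Y"]

# Every headline paired with every image and every button.
combos = list(product(headlines, images, buttons))
print(len(combos))  # 3 x 3 x 2 = 18

# Hypothetical: each combination needs as many visitors as one version of an A/B test.
visitors_per_combo = 5_000
total_needed = visitors_per_combo * len(combos)
print(total_needed)
```

At 5,000 visitors per combination, the 18 combinations need 90,000 visitors in total, compared with 10,000 for a simple two-version test at the same per-version level.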

Multivariate tests really shine when you think elements might affect each other. Maybe a formal headline just fits better with a serious photo, while a casual headline feels right next to a relaxed image.

Digging through the results can get tricky, but the insights are worth it. You’ll spot which combos actually boost conversions, and you can use those wins in future designs.

Integrating AB Testing with Other Marketing Efforts

AB testing isn’t just for websites—it really ties together with email marketing, social media, and ads to make the whole user experience feel seamless. When your messaging matches up everywhere, you’ll usually see better conversion rates.

If you run AB tests on your emails, you can use those results to tweak your website copy. For example, if a subject line gets a lot of opens, why not try it out as a landing page headline? It’s a simple way to keep things consistent from the moment someone clicks to when they (hopefully) convert.

Social media ads can totally borrow from your website AB tests too. If a headline wins on your site, it’s probably worth testing as ad copy—after all, your audience already responded well to it.

Data-driven AB testing strategies can plug right into your CRM. That way, you get to test stuff based on things like how often someone buys or how much they’re worth as a customer.

Integration opportunities:

- Match your landing pages to your ad campaigns

- Send follow-up emails based on what people do on your site

- Use top-performing test elements in your retargeting ads

- Bring in social proof from other channels

Attribution tracking gets super important when you’re testing across different channels. You really need to know which touchpoints are making a difference and how your AB tests play into the bigger customer journey.

Try to sync your testing schedule with your marketing campaigns. If you’ve got a big product launch or a seasonal promo coming up, coordinate your tests across all channels so you get the most out of them.