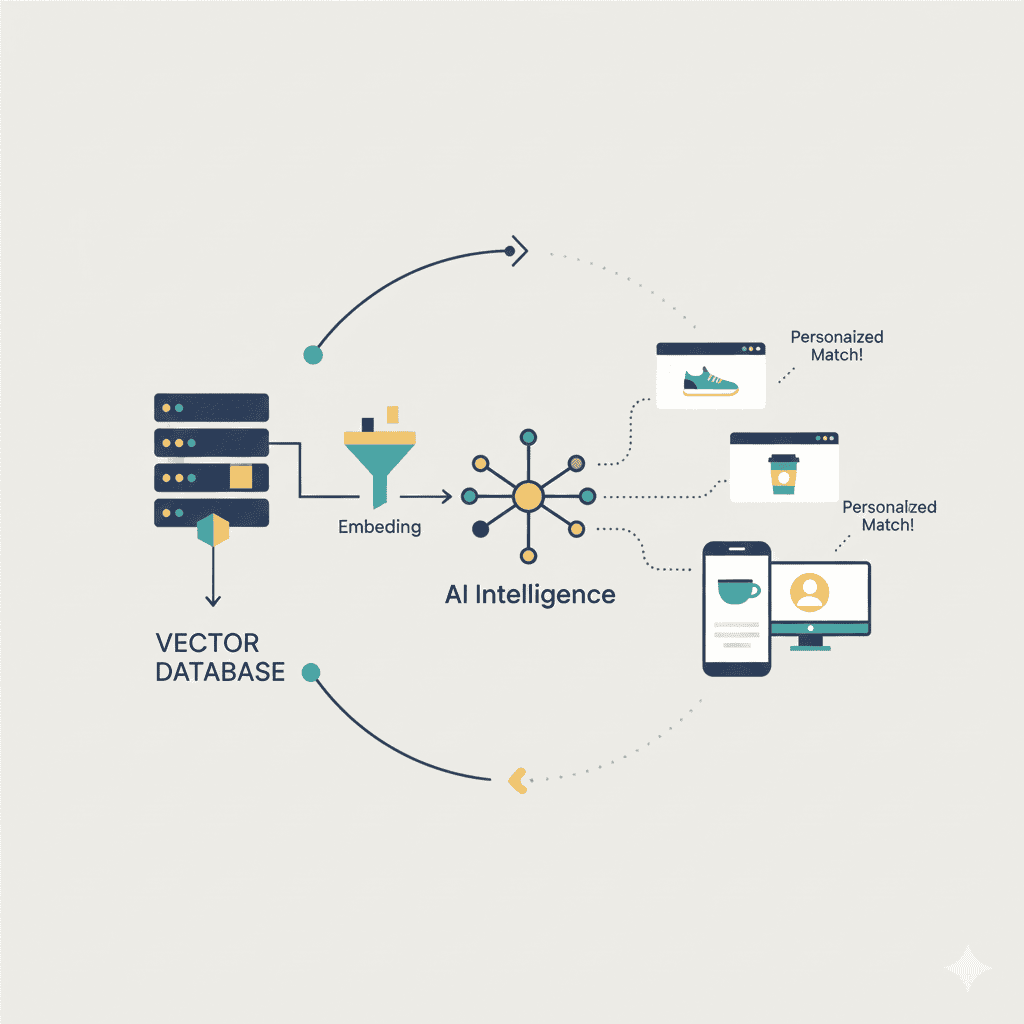

Vector databases are transforming how businesses deliver personalized ads through large language models (LLMs). These specialized databases store and process high-dimensional data representations that capture complex user preferences and behaviors.

By combining vector databases with LLMs for personalization, companies can create highly targeted advertising experiences that understand user intent and context with greater accuracy. The integration enables faster processing of vast amounts of user data while maintaining relevance and engagement.

Vector databases help solve key LLM challenges like outdated knowledge and memory limitations when processing user information for ads. This powerful combination allows for real-time personalization while keeping computational costs manageable.

Key Takeaways

- Vector databases enable faster and more accurate ad targeting through efficient data processing

- Integration of LLMs with vector search improves personalization while reducing computational costs

- Real-time processing of user preferences leads to more relevant advertising experiences

Understanding Vector Databases and LLMs

Vector databases and large language models work together to build AI systems that search and generate text by meaning rather than by exact keywords. Both rely on turning raw data into numerical representations that machines can compare efficiently.

Defining Vector Databases

Vector databases store and organize data as mathematical vectors, making it easy to find similar items quickly. The vectors are produced by an embedding model and live in a high-dimensional space where related concepts sit closer together.

We use vector databases to store and retrieve information based on meaning rather than exact matches. This makes them perfect for AI applications that need to understand context and relationships.

Vector databases excel at similarity searches, which helps match user queries with relevant information. With an approximate index, they can search millions of vectors in milliseconds.

Exploring Large Language Models (LLMs)

LLMs process and generate text by analyzing patterns in vast amounts of training data. They can understand context, generate responses, and perform various language tasks.

LLMs are stateless: they don’t retain information between conversations unless it is supplied again in the prompt. This is where vector databases become essential. They act as external, persistent memory that an application can query and then pass to the LLM as context.

These models excel at:

- Text generation

- Language translation

- Question answering

- Content summarization

Vector Embeddings and Semantic Meaning

Vector embeddings transform words and concepts into numerical representations that capture their meaning. These mathematical representations help machines understand language more like humans do.

Vector embeddings enable LLMs to process:

- Word relationships

- Context clues

- Semantic similarities

We can measure how similar two concepts are by calculating the distance between their vector representations. This allows machines to understand that “car” and “automobile” mean the same thing, even though they’re different words.
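
As a minimal sketch of that comparison, the snippet below computes cosine similarity between hand-written toy vectors. The three-dimensional embeddings are made up for illustration; a real embedding model would produce hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, not the output of any real model.
embeddings = {
    "car":        [0.90, 0.10, 0.05],
    "automobile": [0.85, 0.15, 0.10],
    "banana":     [0.05, 0.20, 0.95],
}

sim_synonyms = cosine_similarity(embeddings["car"], embeddings["automobile"])
sim_unrelated = cosine_similarity(embeddings["car"], embeddings["banana"])
```

With these toy values, `sim_synonyms` comes out far higher than `sim_unrelated`, which is the behavior we would hope to see from a trained model.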

The combination of vector embeddings and semantic understanding helps create more accurate and context-aware AI systems.

Core Concepts: Vector Search and Indexing

Vector databases enable AI systems to find relevant content by converting data and queries into numerical representations that can be compared mathematically. These specialized databases use sophisticated indexing and search techniques to quickly match similar items.

Similarity Search and Query Vectors

When we search in a vector database, we convert our search query into a vector embedding: a list of numbers that captures the query’s meaning and characteristics. The database then compares this query vector to stored vectors using mathematical distance measures.

Two common similarity metrics we use are cosine similarity and Euclidean distance. Cosine similarity is the cosine of the angle between two vectors and ignores their length, while Euclidean distance is the straight-line distance between their endpoints.

The search returns the most similar vectors based on these distance calculations. For example, searching for “summer clothing” would return vectors representing items like t-shirts and shorts.
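
The search step can be sketched as an exhaustive scan that ranks every stored vector by Euclidean distance to the query. The catalog, its two-dimensional vectors, and the `summer_query` values are hypothetical stand-ins for real embeddings.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def top_k(query, items, k=2):
    """Exhaustive search: score every stored vector, return the k closest ids."""
    ranked = sorted(items, key=lambda item_id: euclidean(query, items[item_id]))
    return ranked[:k]

# Hypothetical 2-D embeddings: axis 0 ~ "warm weather", axis 1 ~ "formal wear".
catalog = {
    "t-shirt": [0.9, 0.1],
    "shorts":  [0.8, 0.2],
    "parka":   [0.1, 0.3],
    "tuxedo":  [0.2, 0.9],
}
summer_query = [0.85, 0.15]  # stand-in for the embedding of "summer clothing"
results = top_k(summer_query, catalog, k=2)
```

This brute-force scan is exact but touches every vector, which is why production systems rely on the indexing strategies described next.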

Indexing Strategies in Vector Databases

Vector databases use specialized indexing to organize and quickly retrieve high-dimensional data. Tree-based indexing splits vectors into hierarchical groups based on similarity.

Hashing-based methods map vectors to “buckets” containing similar items. This significantly speeds up search by limiting comparisons to relevant buckets.

We can also use clustering techniques to group similar vectors together. This creates efficient indexes that can be searched much faster than scanning the entire database.

Approximate Nearest Neighbor (ANN) Search

ANN algorithms trade perfect accuracy for dramatic speed improvements. Instead of finding the exact closest matches, they find vectors that are “close enough.”

This approximation makes vector search practical for large-scale applications. Without ANN, searching millions of vectors would be too slow for real-time use.

Popular ANN approaches include:

- Locality-Sensitive Hashing (LSH)

- Hierarchical Navigable Small World graphs (HNSW)

- Product Quantization (PQ)

How LLMs Use Vector Databases for Ad Personalization

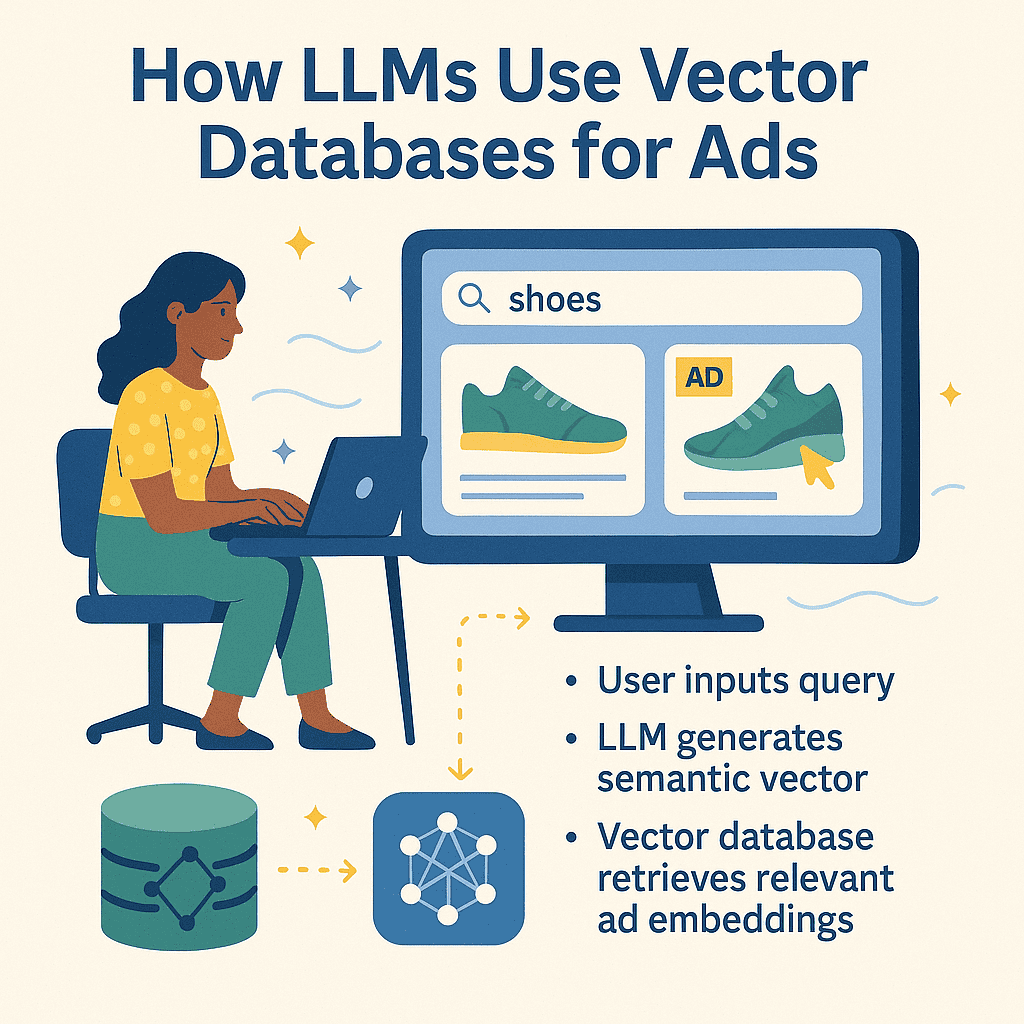

Vector databases and LLMs work together to create highly targeted advertising by analyzing user behavior patterns and content preferences. This technology enables real-time ad matching and dynamic content generation based on user interactions.

Personalized Recommendations with LLMs

Vector databases excel at similarity search, which helps match users with relevant ads based on their interests and behaviors. We store user profiles as vectors that capture preferences, browsing history, and past interactions.

The closest matches are retrieved and passed to the LLM as context, which it uses to generate personalized ad recommendations. For example, if a user frequently reads about hiking gear, the system will serve outdoor equipment ads.

The combination creates a powerful feedback loop:

- User interactions become vector embeddings

- LLMs use content retrieved with these vectors to understand preferences

- Ad recommendations improve through continuous learning
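
One simple way to sketch this loop is to keep the user profile as an exponential moving average of the embeddings the user interacts with. The `update_profile` helper, the 0.2 weight, and the two-dimensional vectors are assumptions for illustration, not a prescribed method.

```python
def update_profile(profile, item_vector, weight=0.2):
    """Blend a newly interacted item's embedding into the user profile."""
    return [(1 - weight) * p + weight * x for p, x in zip(profile, item_vector)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Hypothetical 2-D embeddings: axis 0 ~ "outdoor", axis 1 ~ "electronics".
ads = {"hiking boots": [1.0, 0.0], "headphones": [0.0, 1.0]}
profile = [0.5, 0.5]           # neutral starting profile
hiking_article = [0.9, 0.1]    # embedding of content the user just read

for _ in range(3):             # three hiking-related interactions
    profile = update_profile(profile, hiking_article)

# Pick the ad whose vector best matches the updated profile.
best_ad = max(ads, key=lambda name: dot(profile, ads[name]))
```

Each interaction nudges the profile toward recent behavior, so older interests fade gradually instead of being overwritten.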

Retrieval-Augmented Generation for Targeted Advertising

RAG systems enhance ad targeting by combining stored knowledge with real-time content generation. We use vector databases to maintain an index of product information, ad copy, and customer response data.

When creating targeted ads, LLMs query the vector database to find relevant product details and successful ad examples. This improves ad copy accuracy and relevance.

The system generates customized ad variations by:

- Retrieving similar successful ads

- Adapting messaging to match user preferences

- Creating personalized calls-to-action
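
The retrieval half of this flow can be sketched as follows. The ad records, vectors, and prompt wording are hypothetical, and the final LLM call is deliberately left out because it depends on the provider.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def retrieve(query_vec, ad_index, k=2):
    """Return the k stored ads whose vectors best match the query vector."""
    ranked = sorted(ad_index, key=lambda ad: dot(query_vec, ad["vector"]), reverse=True)
    return ranked[:k]

def build_prompt(user_summary, product, examples):
    """Assemble retrieved examples into the context an LLM would receive."""
    lines = [
        "Write one short ad for the product below.",
        f"Product: {product}",
        f"Audience: {user_summary}",
        "Past ads that performed well with similar users:",
    ]
    lines += [f"- {ad['copy']}" for ad in examples]
    return "\n".join(lines)

# Hypothetical index of past ads with made-up 2-D embeddings.
ad_index = [
    {"copy": "Gear up for the summit: 20% off trail boots.", "vector": [0.9, 0.1]},
    {"copy": "Pack light, hike far with our ultralight tents.", "vector": [0.8, 0.2]},
    {"copy": "Upgrade your home office with a 4K monitor.", "vector": [0.1, 0.9]},
]
user_vector = [1.0, 0.0]  # stand-in for an outdoor-leaning user profile

examples = retrieve(user_vector, ad_index, k=2)
prompt = build_prompt("frequent hiker", "waterproof hiking jacket", examples)
```

The resulting `prompt` string is what would be sent to the model, so the generated copy is grounded in retrieved examples rather than in the model's training data alone.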

Integrating User Preferences in Ad Delivery

We track user engagement metrics and convert them into vector representations. These vectors help measure ad effectiveness and optimize delivery timing.

The infrastructure costs for this integration can be significant, but the improved targeting accuracy often justifies the investment.

Key metrics we monitor:

- Click-through rates

- Time spent viewing ads

- Purchase conversion rates

- Ad interaction patterns

The system adjusts ad delivery based on real-time performance data and user feedback signals. Each interaction refines the targeting model and improves future recommendations.

Key Components of Personalized Ad Systems

Modern ad personalization systems combine vector databases and LLMs to process vast amounts of user data and deliver targeted advertisements with high precision and relevance.

Data Collection: Structured and Unstructured Data

We gather structured data like purchase history, clicks, and demographic information from customer databases and CRM systems. This data follows clear patterns and is easy to organize.

Unstructured data includes social media posts, product reviews, and browsing behavior. These varied data types help create richer user profiles.

Key data points we collect:

- Website interactions

- Search queries

- Time spent on pages

- Device information

- Geographic location

Transforming Data with Embedding Models

Vector embeddings convert text, images, and user behaviors into numerical vectors that machines can process efficiently.

We use specialized embedding models to capture semantic meaning:

- Text embeddings for product descriptions

- Image embeddings for visual content

- User behavior embeddings for interaction patterns

These vectors enable similarity matching between user preferences and ad content.

Metadata Filtering for Tailored Results

We apply metadata filters to refine ad targeting based on specific criteria like age, location, and time of day.

Content personalization systems use these filters to:

- Match ads with demographic segments

- Apply budget constraints

- Ensure brand safety

- Control ad frequency

Metadata helps maintain targeting accuracy while respecting user preferences and privacy settings.
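
A minimal sketch of filtering before ranking is shown below. The field names (`min_age`, `countries`, `frequency_cap`, `impressions`) are hypothetical and chosen only to mirror the criteria listed above.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matches(ad, user):
    """Hard constraints checked before any similarity scoring."""
    return (
        ad["min_age"] <= user["age"]
        and user["country"] in ad["countries"]
        and user["impressions"].get(ad["id"], 0) < ad["frequency_cap"]
    )

def filtered_search(user, ads, k=1):
    """Drop ineligible ads first, then rank the rest by vector similarity."""
    eligible = [ad for ad in ads if matches(ad, user)]
    eligible.sort(key=lambda ad: dot(user["vector"], ad["vector"]), reverse=True)
    return [ad["id"] for ad in eligible[:k]]

# Hypothetical user and ads for illustration.
user = {"age": 30, "country": "DE", "vector": [1.0, 0.0], "impressions": {"tent": 3}}
ads = [
    {"id": "tent", "vector": [0.9, 0.1], "min_age": 0, "countries": {"DE", "FR"}, "frequency_cap": 3},
    {"id": "backpack", "vector": [0.7, 0.3], "min_age": 0, "countries": {"DE"}, "frequency_cap": 3},
    {"id": "whisky", "vector": [0.8, 0.2], "min_age": 18, "countries": {"US"}, "frequency_cap": 3},
]
selected = filtered_search(user, ads, k=1)
```

Here the closest ad by similarity ("tent") is excluded because the user has already reached its frequency cap, so the filter changes the outcome rather than merely trimming the tail.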

Popular Vector Databases and Technologies

Vector databases enable fast similarity search and efficient handling of high-dimensional data for AI applications. These specialized systems store and retrieve vector embeddings that power personalized ad recommendations and content matching.

FAISS

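FAISS (Facebook AI Similarity Search) is Meta’s open-source library for similarity search and clustering of dense vectors. Strictly speaking, it is an indexing library rather than a full database, so persistence, replication, and metadata storage are left to the application. We find it particularly effective for large-scale similarity search and clustering.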

FAISS excels at indexing dense vectors, making it ideal for recommendation systems and image search. Its specialized algorithms can handle billions of vectors efficiently.

Key advantages include:

- Extremely fast similarity search operations

- Support for GPU acceleration

- Multiple indexing options for different use cases

- Easy integration with Python and C++

Pinecone

Pinecone offers a fully managed vector database built specifically for machine learning applications. We see it as a strong choice for production environments.

Notable features:

- Real-time updates and queries

- Automatic scaling and load balancing

- Built-in backup and recovery

- Encryption of data at rest and in transit

The platform provides seamless integration with popular ML frameworks and maintains consistent performance even with high query loads.

Weaviate

Weaviate operates as a cloud-native vector database with powerful GraphQL support. We appreciate its modular architecture and flexibility.

Key capabilities include:

- RESTful API access

- Advanced filtering options

- Kubernetes compatibility

- Cross-reference data objects

The platform excels at combining traditional search with vector similarity, making it perfect for hybrid search applications.

Qdrant

Qdrant delivers production-ready vector similarity search with extensive filtering capabilities. We find its performance particularly impressive for real-time applications.

Core strengths:

- Fast vector indexing

- Rich filtering options

- Simple REST API

- Docker support

The database allows precise payload filtering during search operations, enabling complex queries while maintaining high performance.

Optimizing Ad Personalization Workflows

Vector databases combined with LLMs enable rapid retrieval of relevant ad content and seamless scaling of personalization systems. These optimizations help deliver targeted ads to millions of users with minimal latency.

Vector Indexing and Data Retrieval

Vector databases store and index high-dimensional data representations of ad content, user preferences, and behavioral patterns. This indexing enables fast similarity searches across massive datasets.

We recommend using approximate nearest neighbor (ANN) algorithms for efficient ad content matching. These methods trade perfect accuracy for dramatically improved search speed.

Key indexing strategies include:

- Hierarchical navigable small world (HNSW) graphs

- Inverted file indexes (IVF)

- Product quantization compression

The system must maintain separate indexes for different ad formats and targeting parameters. This separation helps narrow the search space and reduces query time.

Real-Time Updates and Scalability

Real-time data retrieval capabilities allow us to instantly incorporate new user interactions and campaign performance metrics. This immediate feedback loop improves targeting accuracy.

We can achieve horizontal scaling by:

- Distributing vector indexes across multiple nodes

- Implementing read replicas for high availability

- Using load balancers to manage query traffic
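
The first point, distributing an index across nodes, typically follows a scatter-gather pattern: each shard returns its local top-k and a coordinator merges them. The sketch below simulates shards as in-memory dictionaries; the function names and data are assumptions for illustration.

```python
import heapq
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def search_shard(shard, query, k):
    """Local top-k on one node: (distance, id) pairs, closest first."""
    scored = [(euclidean(query, vec), item_id) for item_id, vec in shard.items()]
    return heapq.nsmallest(k, scored)

def search_cluster(shards, query, k):
    """Query every shard, then merge the partial results into a global top-k."""
    partials = [search_shard(shard, query, k) for shard in shards]
    merged = heapq.nsmallest(k, (hit for part in partials for hit in part))
    return [item_id for _, item_id in merged]

# Two hypothetical shards, each holding part of the vector set.
shards = [
    {"a": [0.0, 0.0], "b": [1.0, 1.0]},
    {"c": [0.1, 0.0], "d": [5.0, 5.0]},
]
nearest = search_cluster(shards, query=[0.0, 0.0], k=2)
```

Because every shard returns its own best k, the merged result matches what a single node holding all the vectors would return, at the cost of one extra merge step.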

The architecture must handle concurrent updates while maintaining query performance. Modern vector databases use versioning and atomic operations to prevent conflicts.

Regular index rebalancing helps maintain optimal performance as data volumes grow. We recommend scheduling these operations during off-peak hours to minimize impact.

Challenges in Vector Database Integration with LLMs

Integrating vector databases with LLMs presents several technical hurdles that need careful consideration. The main challenges center around data handling, system performance, and security concerns.

Handling High-Dimensional Data

Vector databases face significant challenges when dealing with high-dimensional data from LLMs. We often encounter embedding vectors with hundreds or thousands of dimensions.

Traditional indexing methods become less effective as dimensions increase, leading to what we call the “curse of dimensionality.” This makes searching and retrieving relevant vectors much slower.

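We can address this through dimensionality reduction techniques like PCA or random projection, which compress the data while approximately preserving distances between vectors. t-SNE is better kept for visualizing embeddings, since it distorts global distances and cannot project new vectors into an existing map.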

Efficiency and Performance Optimization

Storing and processing billions of vectors requires sophisticated data structures and efficient algorithms. The main challenge lies in maintaining fast query speeds while scaling up the database size.

We need to optimize:

- Index structures for quick similarity searches

- Memory usage and caching strategies

- Query processing pipelines

- Data compression techniques

Real-time applications often target response times under 100 ms, which requires careful tuning of these components.

Privacy and Anomaly Detection

Vector databases must protect sensitive information while maintaining utility. We implement encryption for stored vectors and secure transmission protocols.

Detecting anomalies in high-dimensional space presents unique challenges. Traditional outlier detection methods often fail with LLM embeddings.

We use specialized algorithms to:

- Monitor for unusual patterns

- Flag potential security breaches

- Identify data drift

- Validate input vectors
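
As a simple baseline for the monitoring described above (not the specialized algorithms themselves), we can flag vectors that sit unusually far from the centroid of known-good data. The three-standard-deviation cutoff and the sample vectors are assumptions for illustration.

```python
import math
import statistics

def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

def distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def fit_threshold(reference, num_std=3.0):
    """Learn a distance cutoff from vectors known to be normal."""
    center = centroid(reference)
    dists = [distance(v, center) for v in reference]
    cutoff = statistics.mean(dists) + num_std * statistics.pstdev(dists)
    return center, cutoff

def is_anomalous(vector, center, cutoff):
    """True when the vector lies farther from the center than the cutoff."""
    return distance(vector, center) > cutoff

# Hypothetical reference set of "normal" embeddings.
reference = [[1.0, 1.0], [1.1, 0.9], [0.9, 1.1], [1.0, 1.2]]
center, cutoff = fit_threshold(reference)
```

The same distance statistic, tracked over time, gives a rough signal for data drift: if the average distance of incoming vectors climbs steadily, the embeddings no longer resemble the reference set.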

Regular auditing and updating of security measures helps maintain data integrity and system reliability.

Emerging Trends and Future Directions

Vector databases and LLMs are evolving rapidly to enhance ad personalization capabilities. New algorithms and techniques are making searches faster and more accurate while reducing computational costs.

Hierarchical Navigable Small World Graphs

HNSW graphs represent a major advancement in vector search efficiency. These graphs create multiple layers of connections between data points, enabling quick navigation through high-dimensional spaces.

The algorithm starts at the top layer with fewer nodes and moves down through denser layers until finding the most relevant results. This approach reduces search time dramatically compared to traditional methods.
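
The layered descent can be illustrated with a heavily simplified sketch: a greedy walk on each layer, where the result of one layer becomes the entry point of the next. The hand-built two-layer graph is hypothetical, and real HNSW implementations also keep a list of candidates instead of a single node on the bottom layer.

```python
import math

def distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def greedy_search(graph, vectors, entry, query):
    """Walk the graph, always moving to the neighbor closest to the query."""
    current = entry
    current_dist = distance(vectors[current], query)
    while True:
        best, best_dist = current, current_dist
        for neighbor in graph[current]:
            d = distance(vectors[neighbor], query)
            if d < best_dist:
                best, best_dist = neighbor, d
        if best == current:  # no neighbor is closer: local minimum reached
            return current
        current, current_dist = best, best_dist

def layered_search(layers, vectors, entry, query):
    """Search sparse layers first; each result seeds the next, denser layer."""
    for graph in layers:
        entry = greedy_search(graph, vectors, entry, query)
    return entry

# Hypothetical data: eight points on a line, ids 0..7.
vectors = {i: [float(i)] for i in range(8)}
top_layer = {0: [4], 4: [0]}  # few nodes, long-range links
bottom_layer = {i: [j for j in (i - 1, i + 1) if 0 <= j <= 7] for i in range(8)}

found = layered_search([top_layer, bottom_layer], vectors, entry=0, query=[6.2])
```

The top layer jumps from node 0 to node 4 in one hop, and the bottom layer only needs two more hops to reach node 6, instead of six hops along the chain.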

We’ve seen significant improvements in ad targeting when using HNSW, with search times 50-100x faster than basic nearest neighbor searches. The method excels at finding similar products or user behaviors in large-scale advertising datasets.

Product Quantization Techniques

Advanced quantization methods compress high-dimensional vectors into smaller codes while maintaining search accuracy. This compression enables faster processing and reduced storage costs.

Product quantization divides vectors into smaller subspaces, each independently encoded. For ad systems, this means:

- Lower memory usage per user profile

- Faster similarity comparisons

- Reduced infrastructure costs

- Better scaling for millions of users
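
The encoding step can be sketched as below. The codebooks are hand-written for illustration; in practice they would be learned per subspace, for example with k-means.

```python
def nearest_centroid(subvector, centroids):
    """Index of the centroid closest to the subvector (squared Euclidean)."""
    def sq_dist(c):
        return sum((s - x) ** 2 for s, x in zip(subvector, c))
    return min(range(len(centroids)), key=lambda i: sq_dist(centroids[i]))

def pq_encode(vector, codebooks):
    """Split the vector into equal chunks and store one centroid id per chunk."""
    chunk = len(vector) // len(codebooks)
    return [
        nearest_centroid(vector[i * chunk:(i + 1) * chunk], book)
        for i, book in enumerate(codebooks)
    ]

def pq_decode(code, codebooks):
    """Approximate reconstruction: concatenate the chosen centroids."""
    out = []
    for idx, book in zip(code, codebooks):
        out.extend(book[idx])
    return out

# Hypothetical codebooks: 2 subspaces, 2 centroids each, 2 dimensions per chunk.
codebooks = [
    [[0.0, 0.0], [1.0, 1.0]],
    [[0.0, 1.0], [1.0, 0.0]],
]
code = pq_encode([0.9, 1.1, 0.1, 0.8], codebooks)
approx = pq_decode(code, codebooks)
```

The four-float vector is stored as two small integers, and `approx` shows what the database effectively compares against: a nearby but not identical vector, which is the accuracy cost of the compression.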

Advances in Retrieval Methods

New retrieval techniques combine traditional keyword search with semantic understanding. This hybrid approach improves ad matching accuracy by considering both exact matches and contextual relevance.
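
One simple form of hybrid scoring is a weighted sum of a keyword score and a vector similarity score. The sketch below assumes a naive term-overlap keyword score, made-up two-dimensional embeddings, and an `alpha` weight of 0.5; production systems often use BM25 and rank fusion instead.

```python
import math

def keyword_score(query, text):
    """Fraction of query terms that appear verbatim in the text."""
    terms = query.lower().split()
    words = set(text.lower().split())
    return sum(t in words for t in terms) / len(terms)

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def hybrid_score(query, query_vec, ad, alpha=0.5):
    """alpha = 1.0 is pure keyword matching, alpha = 0.0 is pure vector search."""
    return alpha * keyword_score(query, ad["text"]) + (1 - alpha) * cosine(query_vec, ad["vector"])

# Hypothetical ads and embeddings for illustration.
ads = [
    {"id": "A", "text": "lightweight trail running shoes", "vector": [0.9, 0.1]},
    {"id": "B", "text": "sneakers for off-road jogging", "vector": [0.95, 0.05]},
    {"id": "C", "text": "running a small business", "vector": [0.1, 0.9]},
]
query, query_vec = "trail running shoes", [1.0, 0.0]

ranked = sorted(ads, key=lambda ad: hybrid_score(query, query_vec, ad), reverse=True)
ranking = [ad["id"] for ad in ranked]
```

Ad B shares no keywords with the query but is semantically close, while ad C shares a keyword but is off-topic. The hybrid score ranks B above C, which keyword matching alone would not.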

We’re seeing impressive results with adaptive indexing systems that automatically adjust to changing ad content and user behavior patterns. These systems learn from interaction data to optimize search paths.

The integration of approximate nearest neighbor (ANN) algorithms with contextual filters has reduced false positives in ad matching by up to 40%.

Real-World Applications and Case Studies

Vector databases combined with LLMs transform how businesses deliver personalized advertising experiences through advanced data processing and real-time recommendations. These technologies enable highly targeted interactions across multiple customer touchpoints.

Dynamic Product Recommendations

Vector databases power intelligent recommendation systems that analyze customer behavior patterns and purchase history in real-time. This creates more accurate product suggestions than traditional methods.

We’ve seen major e-commerce platforms use this technology to boost conversion rates by 25-40% through personalized product placements.

The system processes both structured data (purchase history, clicks) and unstructured data (product descriptions, reviews) to create comprehensive user profiles.

Key benefits include:

- Real-time recommendation updates

- Better cross-selling opportunities

- Reduced cart abandonment rates

- More relevant upsell suggestions

AI-Powered Search and Content Generation

Modern search capabilities now understand user intent beyond simple keyword matching. GPT-4 and similar LLMs analyze search context to deliver personalized ad copy and creative content.

Content generation systems can automatically:

- Create tailored ad headlines

- Generate product descriptions

- Adapt messaging for different audiences

- Produce localized content variations

The combination creates a powerful system for serving the right content to the right users at the right time.

Chatbots and Conversational Personalization

LLMs integrated with vector databases enable chatbots to maintain context and provide relevant responses based on user interactions and preferences.

These AI-powered assistants can:

- Recommend products during conversations

- Answer product-specific questions

- Guide users through purchase decisions

- Provide personalized support

The systems learn from each interaction to improve future recommendations and create more natural conversation flows.

Recent implementations show a 30% increase in customer engagement when using these personalized conversational approaches.

Best Practices for Implementing LLMs and Vector Databases in Ad Personalization

Proper implementation techniques and data management strategies make the difference between mediocre and exceptional ad personalization results. These practices help maximize accuracy while maintaining efficiency.

Prompt Engineering for Targeted Advertising

We recommend creating specific templates for different ad categories and audience segments. Each template should include clear instructions about tone, style, and key messaging points.

Testing prompts with small audience samples before full deployment helps identify potential issues early. We suggest using A/B testing to compare different prompt variations.

Key prompt elements to include:

- Target audience demographics

- Product features and benefits

- Previous engagement history

- Brand voice guidelines
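
A template covering these elements could look like the sketch below, which uses Python's standard `string.Template`. The placeholder names and the wording of the prompt are assumptions for illustration.

```python
from string import Template

# Hypothetical template; one such template could exist per ad category.
AD_PROMPT = Template(
    "Write a $tone ad headline for $product.\n"
    "Audience: $audience\n"
    "Key benefits: $benefits\n"
    "Recent engagement: $history\n"
    "Follow these brand voice rules: $voice"
)

def render_prompt(**fields):
    """Fill the template; substitute() raises KeyError if a field is missing."""
    return AD_PROMPT.substitute(**fields)

prompt = render_prompt(
    tone="playful",
    product="ultralight tent",
    audience="hikers aged 25-40",
    benefits="weighs under 1 kg, sets up in two minutes",
    history="clicked two camping ads last week",
    voice="friendly, no jargon",
)
```

Failing loudly on a missing field is a deliberate choice here: a prompt with an empty audience or brand-voice slot is easy to miss in review and can quietly degrade output. For A/B tests, each variant can be a separate `Template` rendered from the same fields.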

Advanced indexing techniques enhance the accuracy of ad targeting by improving semantic search capabilities.

Managing Data Quality and Integrity

Regular data cleaning and validation processes ensure accurate personalization. We need to check for outdated customer information and remove irrelevant vectors.

Essential data management steps:

- Daily database maintenance

- Regular vector re-indexing

- Automated data validation checks

- Privacy compliance monitoring
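
An automated validation check might look like the sketch below. The record layout (`vector`, `updated_at`), the expected dimension, and the age limit are hypothetical and would depend on the embedding model and retention policy in use.

```python
import math
from datetime import datetime, timedelta, timezone

def validate_record(record, expected_dim, max_age_days, now):
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    vec = record["vector"]
    if len(vec) != expected_dim:
        problems.append("wrong dimension")
    if any(not math.isfinite(x) for x in vec):
        problems.append("non-finite value")
    elif all(x == 0 for x in vec):
        problems.append("zero vector")
    if now - record["updated_at"] > timedelta(days=max_age_days):
        problems.append("stale")
    return problems

# Hypothetical records checked against a fixed reference time.
now = datetime(2024, 1, 10, tzinfo=timezone.utc)
fresh = {"vector": [0.1, 0.2, 0.3], "updated_at": datetime(2024, 1, 9, tzinfo=timezone.utc)}
broken = {"vector": [0.0, float("nan")], "updated_at": datetime(2023, 1, 1, tzinfo=timezone.utc)}

fresh_problems = validate_record(fresh, expected_dim=3, max_age_days=30, now=now)
broken_problems = validate_record(broken, expected_dim=3, max_age_days=30, now=now)
```

Records that return a non-empty list can be quarantined or re-embedded before the next re-indexing run.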

Vector database integration requires careful attention to data freshness and quality standards.

Real-time monitoring helps catch data inconsistencies quickly. We should set up alerts for unusual patterns or potential data quality issues.

Frequently Asked Questions

Vector databases transform ad personalization by enabling real-time semantic search and context-aware targeting through efficient storage and retrieval of high-dimensional embeddings. These systems work alongside LLMs to process customer data, behavior patterns, and content preferences at scale.

What is the role of vector databases in enhancing ad personalization with large language models?

Vector databases act as specialized storage systems that index and retrieve embedding vectors used by LLMs for understanding user preferences and behaviors. They enable fast similarity searches across millions of data points to find relevant ad content.

The integration allows for real-time personalization by matching user queries and profiles with appropriate ad content based on semantic meaning rather than just keywords.

How does vector representation in LLMs improve targeted advertising?

Vector representations capture subtle relationships between users, products, and ad content by converting text and user data into mathematical spaces where similar items cluster together.

These numerical representations help identify patterns in user behavior that may not be obvious through traditional targeting methods.

Which open-source vector databases are best suited for integration with large language models?

Milvus and Weaviate excel at handling the high-dimensional vectors produced by modern LLMs while maintaining fast query speeds needed for ad serving.

Qdrant offers robust filtering capabilities that help narrow down relevant ads based on multiple criteria simultaneously.

Can vector databases and LLMs be effectively combined for question answering and information retrieval in advertising?

Vector databases significantly enhance LLM capabilities by providing efficient storage and retrieval of contextual information for ad-related queries.

This combination enables natural language interactions with advertising systems while maintaining quick response times essential for real-time bidding.

What are the architectural considerations when using vector databases with LLMs for ad personalization?

The system architecture must balance query speed with accuracy, typically using approximate nearest neighbor search algorithms.

Data indexing strategies need careful planning to handle continuous updates of user profiles and ad content while maintaining performance.

How does AWS’s vector database service facilitate LLM use in personalized advertising campaigns?

AWS offers vector search through several services, such as Amazon OpenSearch Service and the pgvector extension on Amazon RDS and Aurora for PostgreSQL. These provide scalable infrastructure for storing and querying high-dimensional embeddings generated from user data and ad content.

Because they are cloud-based, they integrate with other AWS services for ad serving and analytics, which makes it easier to deploy personalized campaigns at scale.