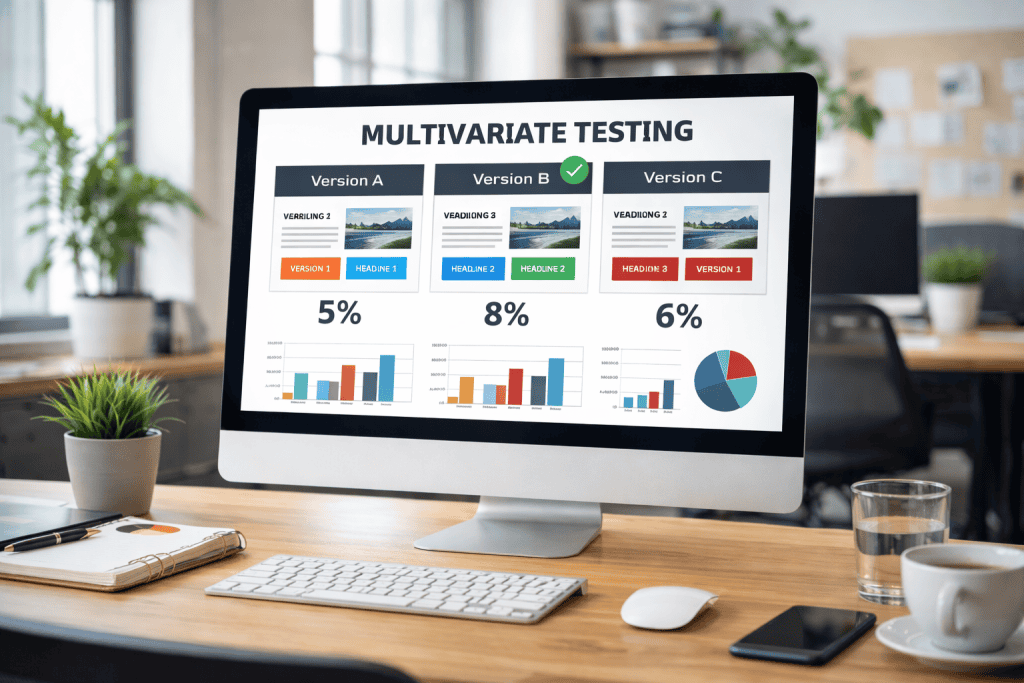

Testing different versions of websites and marketing campaigns is now a must for any business hoping to boost results. AB multivariate testing takes the straightforwardness of A/B testing and ramps it up, letting you check out several elements at once. That means you get a deeper read on what actually sparks conversions.

A lot of companies get stuck trying to figure out which changes actually get customers to act. A/B testing just looks at two versions of one thing, but multivariate testing dives into how different combinations of changes work together.

This approach doesn’t just tell you what’s working—it helps you figure out why.

Picking the right testing strategy can make a real difference to your marketing results. But deciding which method to use? That takes a bit of understanding about what each one does best (and where it falls short).

When you get the hang of AB multivariate testing—from setup to making sense of complicated results—you can optimise the whole customer journey, not just tweak a button or two.

What Is AB Multivariate Testing?

AB multivariate testing mixes the classic A/B approach with a multivariate twist, letting you see how different webpage elements work together.

This kind of test helps businesses spot which combos of changes actually make for the best user experience and conversion rates.

Definition of AB Multivariate Testing

AB multivariate testing is basically a way to test several variables at the same time so you can see how different combinations affect what users do.

Unlike a basic A/B test that just compares two versions, this approach throws multiple elements into the mix all at once.

You’ll end up with a bunch of webpage versions by tweaking things like headlines, images, buttons, and text blocks—sometimes all at the same time.

What stands out:

- You’re testing more than one thing at once

- You can spot how different parts play off each other

- In a full factorial test, you look at every possible combo of changes

- You’ll need more traffic than you would for regular A/B tests

This strategy helps you find the best mix of elements, not just which single tweak works. It’s pretty cool for seeing how one part of your page can boost (or drag down) another.

Differences Between AB Testing and Multivariate Testing

A/B testing checks out two versions of one thing, but multivariate testing throws lots of elements into the ring together.

These methods have different uses and need different resources.

A/B Testing:

- Only changes one thing at a time

- Needs fewer people to participate

- Results come in fast

- Great for simple tweaks

Multivariate Testing:

- Changes a bunch of things at once

- Needs way more visitors

- Takes longer to finish up

- Shows how stuff works together in a more complicated way

Go with A/B testing if you’ve got simple changes and not much traffic. Multivariate is better if you want to see how different elements interact.

It really comes down to your goals, how much traffic you get, and what resources you have.

Core Concepts and Terminology

Variables are just the different parts of your page you want to test, like headlines or buttons. Each variable can have a few variations—so maybe three different headlines or two button colours.

Combinations happen when the system mixes and matches your variations. If you’ve got two headlines and three button colours, you’ll end up with six combos.

Traffic split means you divide your website visitors between all those combos. If you’ve got six, each one gets about 16.7% of the people.
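Here’s a quick Python sketch of that arithmetic. The headlines and colours are made-up placeholders, not from a real test. `itertools.product` builds every combination, and an even split gives each one the same share of traffic.

```python
from itertools import product

# Placeholder variations for two page elements (hypothetical values)
variables = {
    "headline": ["Headline A", "Headline B"],
    "button_colour": ["green", "orange", "blue"],
}

# Every combination of one variation per element (a full factorial design)
combinations = list(product(*variables.values()))

# Even split: each combination gets the same share of visitors
share = 1 / len(combinations)

for combo in combinations:
    print(dict(zip(variables, combo)), f"{share:.1%}")
```

Swap in your own elements and variations; each combination’s share shrinks fast as you add more.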

Statistical significance is a way of saying the difference you see probably isn’t just random luck. Most folks wait for a 95% confidence level before picking a winner.

Interaction effects are when one change (like a red button) works great with one headline but flops with another. This is where you really start to see what’s worth keeping.

How AB Multivariate Testing Works

AB multivariate testing lets you check out a bunch of variables at once, but you’ve got to plan carefully if you want results that actually make sense.

You need to set clear goals and choose variables that matter, or you’ll just end up with a mess of confusing data.

Test Design and Planning

Start by picking the webpage (or section) you want to improve. Figure out if you want to test just one thing at a time or see how a few elements interact.

You’ll need to crunch some numbers to figure out how many visitors you’ll need based on your current traffic and conversion rates.

If your site gets a lot of visitors, you can handle more complex tests. Smaller sites? Maybe keep it simple.

Set up a timeline that takes into account busy seasons or slow weeks. Most tests should run for at least a week or two, just to catch different user habits.

Don’t forget:

- Check your current conversion rates and traffic

- Decide what counts as “significant” (usually 95% confidence)

- Think about timing and outside factors

- Make sure you’ve got the tech and people to pull this off

You’ll want to assign developers, designers, and analysts to handle the setup and keep an eye on things.

Setting Experiment Goals

If you don’t know what you’re aiming for, you’ll never know if you got there. Teams should pick a main metric before launching any test—maybe conversion rate, click-through rate, or revenue per visitor.

Secondary metrics help you see the bigger picture, like bounce rate or time on page.

Set goals that are clear and measurable. Instead of just “improve performance,” try “get 15% more email signups” or “cut checkout abandonment by 10%.”

A few good primary goals:

- Boost purchase conversions

- Get more email signups

- Improve form completion rates

- Increase click-throughs

Most folks stick with a 95% confidence level before making any big changes.

Implementation Strategies

If you want your tests to work, you’ve got to pick the right tools, make sure everything connects to your analytics, and collect data that’s actually reliable.

Choose a platform that fits your needs—no sense paying for a rocket ship if you just need a bicycle.

Choosing the Right Tools and Platforms

Testing platforms come in all shapes and sizes. Google Optimize used to give smaller businesses the basics for free, but Google shut it down in September 2023, so you’ll need to look elsewhere.

If you need more muscle, Optimizely and VWO have some pretty slick multivariate features.

Think about:

- How much traffic you’ve got

- How tricky it’ll be to set up

- Your budget

- What your team knows how to use

Big sites need platforms that handle lots of data fast. Smaller sites might want tools that can pick up on changes even with fewer visitors.

If your team isn’t super technical, drag-and-drop tools are a lifesaver. Developers might prefer something they can really customise.

Budget matters, too. Free tools usually have limits, while paid ones offer more features and flexibility.

Integrating With Analytics Systems

You’ll want your testing data to show up in whatever analytics platform you already use. Google Analytics is super common and makes it easy to see your test results next to other important numbers.

Here’s what you’ll need to do:

- Set up goal tracking in your analytics

- Add custom dimensions for each test variation

- Turn on cross-domain tracking if you need it

- Double-check that everything works before you start the test

Make sure you’re tracking every key user action. E-commerce? Track purchases. Lead gen? Track form fills. Content? Look at engagement.

Custom segments can help you see how different groups (like mobile vs. desktop users) react to your changes.

Keeping your data consistent across platforms is a big deal. Run regular checks to make sure your tracking codes are working and numbers match up.

Ensuring Robust Data Collection

If your data’s messy, your results will be, too. Figure out how many visitors you need before you start—usually at least 1,000 per variation.

Don’t skip these:

- Filter out bots

- Prevent duplicate users

- Watch your statistical significance

- Keep notes on anything weird that might mess with results

Wait until you hit 95% confidence before picking a winner. Ending tests too soon can lead you astray, but running them forever isn’t smart either—seasonal stuff can throw things off.

If there’s a big sale, a holiday, or your site goes down, jot it down. Those things can mess with your numbers.

Check your data regularly. If your test platform and analytics don’t match up, fix it before things get out of hand.

Analysing AB Multivariate Test Results

When it’s time to look at your results, you’ll need to check if they’re statistically sound, figure out what they mean, and share your findings in a way people actually get.

You’ll be calculating confidence levels, looking at how variables interact, and turning all that data into something useful.

Statistical Significance and Confidence

Statistical significance tells you whether your results are unlikely to be just random noise. For multivariate tests, you’ll want a 95% confidence level.

You need enough people in your test to trust the results. Each extra variable means you’ll need even more traffic.

Watch these numbers:

- P-value: Below 0.05 is good

- Confidence interval: Shows your range of likely outcomes

- Statistical power: 80% or higher is the usual target
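One common way to get a p-value and confidence interval, assuming you compare a single combination against the control, is a two-proportion z-test. The sketch below uses only Python’s standard library; the function name and counts are illustrative, and your testing platform may use a different statistical method.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_test(conv_a: int, n_a: int, conv_b: int, n_b: int,
                        confidence: float = 0.95):
    """Compare two conversion rates with a two-sided z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a

    # Pooled rate under the null hypothesis (no real difference)
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = diff / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Unpooled standard error for the confidence interval on the difference
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    ci = (diff - z_crit * se, diff + z_crit * se)
    return p_value, ci

# Hypothetical counts: control 200/5,000 vs. variant 250/5,000
p_value, (low, high) = two_proportion_test(200, 5_000, 250, 5_000)
print(f"p-value: {p_value:.4f}, 95% CI for the difference: {low:.4f} to {high:.4f}")
```

If the interval for the difference doesn’t include zero, that lines up with a p-value below 0.05.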

Multivariate tests chew through more traffic than A/B tests. Every new variable multiplies your sample size needs.

Data pros usually say to keep tests running until you reach the sample size you planned up front, and only then judge significance. If you stop too soon, you risk making the wrong call.

Type I errors (false positives) and Type II errors (missed real changes) are always something to watch out for.
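False positives matter more in multivariate tests because you’re usually comparing several combinations against the control, and each comparison at a 5% significance level carries its own risk. Assuming the comparisons are independent (a simplification), the chance of at least one false positive is 1 - (1 - α)^k for k comparisons. The Bonferroni correction at the end is one simple, conservative fix, not the only one.

```python
def family_wise_error(alpha: float, comparisons: int) -> float:
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** comparisons

# e.g. seven combinations each compared against one control
for k in (1, 3, 7):
    print(k, f"{family_wise_error(0.05, k):.1%}")

# Bonferroni correction: test each comparison at alpha / k instead
corrected_alpha = 0.05 / 7
print(f"{family_wise_error(corrected_alpha, 7):.1%}")
```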

Interpreting Outcomes

You’ll often see that elements work together in ways you wouldn’t expect. Sometimes, the best combo isn’t made up of the individually top-performing parts.

Look for:

- Positive interactions: Two changes make each other better

- Negative interactions: One change messes up another

- Neutral interactions: No effect either way

Main effects show what each variable does on its own, but interactions tell you how they play together.
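To make main effects and interactions concrete, here’s a sketch for a 2×2 test (two headlines × two buttons) with made-up conversion rates. It only computes point estimates; in a real analysis you’d also check whether each effect is statistically significant.

```python
# Hypothetical conversion rates for a 2x2 test:
# keys are (headline, button), values are observed conversion rates
rates = {
    ("old", "old"): 0.040,
    ("old", "new"): 0.046,
    ("new", "old"): 0.050,
    ("new", "new"): 0.044,
}

def mean(values):
    return sum(values) / len(values)

# Main effect: average change from switching one element, averaged over the other
headline_effect = (mean([rates[("new", b)] for b in ("old", "new")])
                   - mean([rates[("old", b)] for b in ("old", "new")]))
button_effect = (mean([rates[(h, "new")] for h in ("old", "new")])
                 - mean([rates[(h, "old")] for h in ("old", "new")]))

# Interaction: does the button's effect depend on which headline is shown?
button_effect_old_headline = rates[("old", "new")] - rates[("old", "old")]
button_effect_new_headline = rates[("new", "new")] - rates[("new", "old")]
interaction = button_effect_new_headline - button_effect_old_headline

print(f"headline main effect: {headline_effect:+.4f}")
print(f"button main effect:   {button_effect:+.4f}")
print(f"interaction:          {interaction:+.4f}")
```

In these numbers the new button does nothing on average, yet it helps with the old headline and hurts with the new one. That’s exactly the kind of pattern a single A/B test on the button would hide.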

Multivariate analysis checks all combos at once, so you can spot patterns you’d miss with single A/B tests.

Conversion lift shows you the percentage boost a combo delivers over the control. Comparing these lifts helps you decide what’s really working.
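Lift is usually reported relative to the control. Here’s a minimal sketch with hypothetical rates and combo names. Going from 4.0% to 4.6% is a 0.6 percentage-point change but a 15% relative lift, so say which one you mean in reports.

```python
def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Percentage change in conversion rate compared with the control."""
    return (variant_rate - control_rate) / control_rate * 100

# Hypothetical rates: control converts at 4.0%
control = 0.040
combos = {"headline B + green button": 0.046, "headline B + blue button": 0.038}

for name, rate in combos.items():
    print(f"{name}: {relative_lift(control, rate):+.1f}%")
```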

Statistical models can point out which variables matter most, so you know where to focus next.

Reporting Insights

The best reports turn a sea of data into clear takeaways. Point out which combo won and why.

Include:

- How long the test ran and how many people saw it

- The winning combo and its confidence level

- How much conversion rates improved

- Any impact on revenue

Charts and graphs help people see the story at a glance. Show how different variables interacted—it’s way easier to understand that way.

Your recommendations should spell out what to do next. If there’s technical work needed, mention it and give a rough timeline.

Connect your findings to business goals. Don’t just talk stats—show how it helps the bottom line or user experience.

Keep track of what didn’t work, too. You’ll learn just as much from the losers as the winners.

An action plan helps make sure you actually use what you learned. Otherwise, all that testing goes to waste.

Best Practices for AB Multivariate Testing

If you want your AB multivariate tests to actually help, you’ll need to plan out sample sizes, cut down on bias, and run your tests for just the right amount of time.

Nail these basics, and you’ll get insights you can actually use for smarter data-driven decisions.

Sample Size Determination

Sample size really makes or breaks how much you can trust your test results. If you run tests with too few people, you’ll probably end up with sketchy data and bad decisions.

Minimum participants needed:

- Simple A/B test: 1,000-5,000 per variant

- Multivariate test: 10,000-50,000 total participants

- Complex multivariate test: 100,000+ participants

The more variants you add, the more people you need. Each combination in a multivariate test needs roughly as many visitors as one variant of a basic A/B test, so your total traffic needs grow with every combination you add.

Key factors for sample calculation:

- Your current conversion rate

- How much improvement you expect

- Statistical confidence level (95% is the usual)

- Number of variants you’re testing

Statistical power calculators are super handy. They’ll estimate how many folks you need for each variant, so you don’t have to guess.
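Here’s a rough version of what those calculators do, using the standard normal-approximation formula for comparing two conversion rates. It’s a sketch: `sample_size_per_variant` is a hypothetical helper, and the baseline and lift are made-up inputs. Results can land far from rules of thumb, especially with low baseline rates or small lifts.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in each variant for a two-sided two-proportion test."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)  # conversion rate you hope to detect
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)

# Hypothetical: 4% baseline, hoping to detect a 15% relative lift (4% -> 4.6%)
n = sample_size_per_variant(0.04, 0.15)
print(n)      # per variant
print(n * 6)  # total for a six-combination multivariate test
```

Multiply by the number of combinations for a multivariate test, which is why traffic requirements climb so quickly.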

If your site gets a lot of traffic, you can try more complex tests. But if you’re on the lower-traffic side, stick with simple A/B tests for now.

Minimising Bias and Errors

Bias can totally mess up your results and send you in the wrong direction. There are a few common types that pop up in AB and multivariate testing.

Random assignment helps dodge selection bias. Everyone should have the same chance to see each variant. Use good randomisation tools instead of assigning people yourself.
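A common way to randomise fairly while keeping returning visitors in the same variant is to hash a stable user ID. This is a generic sketch, not how any particular platform does it; the helper, experiment name, and IDs are hypothetical.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a user to a variant so repeat visits stay consistent."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]

variants = ["control", "combo_1", "combo_2", "combo_3"]
print(assign_variant("user-123", "homepage-mvt", variants))
```

Including the experiment name in the hash means a user’s bucket in one test doesn’t decide their bucket in another.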

Seasonal bias is sneaky. If you test during holidays, sales, or weird traffic spikes, you’ll get results that don’t reflect normal behaviour.

Sample ratio mismatch usually means something’s broken. Each variant should get about the same amount of traffic. If not, you might have a tracking or setup issue.
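You can check for sample ratio mismatch with a simple test on the traffic counts. For two variants, the z-test below is equivalent to a chi-square goodness-of-fit test. The helper name and counts are hypothetical; many teams only raise an alarm at a strict threshold such as p < 0.001.

```python
from math import sqrt
from statistics import NormalDist

def srm_p_value(visitors_a: int, visitors_b: int, expected_share_a: float = 0.5) -> float:
    """Two-sided p-value that the observed split matches the configured split."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    sd = sqrt(total * expected_share_a * (1 - expected_share_a))
    z = (visitors_a - expected_a) / sd
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical counts for a 50/50 test
print(srm_p_value(50_210, 49_790))  # small imbalance: large p-value, likely fine
print(srm_p_value(51_500, 48_500))  # large imbalance: tiny p-value, investigate
```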

| Bias Type | Prevention Method |
|---|---|
| Selection | Random visitor assignment |
| Seasonal | Test during normal periods |
| Technical | Monitor traffic distribution |
| Novelty | Run tests for full cycles |

Novelty effects are real. New designs often look great at first, but that might fade. Wait a bit before making any calls.

Check your data quality (traffic levels and tracking) every day while the test runs. If you spot weird patterns or traffic drops, dig in and see what’s up.

Test Duration Optimisation

How long you run a test actually matters a lot. Too short, and your data’s weak. Too long, and you’re just wasting time.

Minimum duration requirements:

- Simple tests: 1-2 weeks minimum

- Multivariate tests: 2-4 weeks minimum

- Complex tests: 4-8 weeks for reliable data

Try to run tests through a full business cycle. You want to catch both weekday and weekend behaviour.

Don’t just stop when you hit statistical significance. You also need enough people in each variant to trust the outcome.

Stop tests when you’ve got:

- 95% statistical confidence

- Minimum sample size for each variant

- At least one full business cycle

- A clear winner

Try not to peek at the results too often. If you stop the moment an early check looks significant, you’ll get more false positives and can end up backing the wrong version.
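Here’s a small simulation that shows why. It runs A/A tests (both versions identical, so any “winner” is a false positive) and compares checking for significance after every batch of visitors with looking once at the end. All parameters are arbitrary assumptions chosen to keep it fast.

```python
import random
from math import sqrt
from statistics import NormalDist

def z_test_p(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate_aa(runs=400, peeks=10, batch=200, rate=0.10, seed=42):
    """A/A tests (no real difference): compare peeking vs. one final look."""
    rng = random.Random(seed)
    peek_hits = final_hits = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        ever_significant = False
        for _ in range(peeks):
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            if z_test_p(conv_a, n, conv_b, n) < 0.05:
                ever_significant = True  # a peeker would stop and declare a winner
        peek_hits += ever_significant
        final_hits += z_test_p(conv_a, n, conv_b, n) < 0.05
    return peek_hits / runs, final_hits / runs

peeking_rate, final_rate = simulate_aa()
print(f"false positives with peeking: {peeking_rate:.1%}")
print(f"false positives with one look: {final_rate:.1%}")
```

With these settings, checking after every batch typically flags far more than 5% of A/A tests, while the single final look stays close to 5%.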

Sometimes outside stuff—like marketing pushes or competitor changes—means you need to extend or restart your test.

It’s usually better to check test performance weekly, not daily. This helps you avoid jumping the gun because of early trends.

Common Challenges and Solutions

Multivariate testing isn’t always a walk in the park. The biggest headaches usually come from handling complicated test setups, getting enough people to participate, and making sure your variables don’t mess each other up.

Handling Test Complexity

Things get complicated fast when you add more variables. Let’s say you test 3 elements with 2 versions each—you’re suddenly tracking 8 combos.

Design Simplification Strategies:

- Stick to 2-3 key variables at most

- Focus on what could really move the needle

- Try fractional factorial designs to keep things manageable

Too many variations split your traffic so thin that it’s tough to spot a real winner.
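Fractional factorial designs cut the number of combinations by testing a structured subset. Here’s a sketch of a half fraction for three elements with two versions each, coding each version as -1 or +1 and setting the third element to the product of the other two. The trade-off: in this design each main effect is confounded with a two-way interaction (for example, A with the B×C interaction), so it works best when you expect interactions to be small.

```python
from itertools import product

# Three elements, two versions each, coded -1 (current) and +1 (new)
full_factorial = list(product([-1, 1], repeat=3))

# Half-fraction 2^(3-1) design: set element C = A * B (defining relation I = ABC)
half_fraction = [(a, b, a * b) for a, b in product([-1, 1], repeat=2)]

print(len(full_factorial), "combinations in the full design")
print(len(half_fraction), "combinations in the half fraction")
for run in half_fraction:
    print(run)
```

Each version of each element still appears in half the runs, so traffic stays balanced while you only build four combinations instead of eight.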

Implementation Solutions:

- Start with A/B tests if you’re new to this

- Keep track of all your test variations

- Use platforms that can handle complexity for you

Go after variables that change user behaviour. Testing button colour and page layout at the same time can get messy and might not tell you much.

Dealing With Insufficient Data

You need a lot of people for multivariate tests to mean anything. Each extra variable means you need even more traffic.

Sample Size Requirements:

- Basic A/B test: 1,000+ conversions per variation

- 4-variation multivariate: 4,000+ conversions minimum

- Complex tests: 10,000+ conversions often needed

A lot of sites just don’t get enough visitors for big tests. If you have under 100,000 monthly visitors, you’ll probably struggle to get solid data.

Data Solutions:

- Run tests longer to gather more info

- Test only on your highest-traffic pages

- Try sequential A/B tests instead of all-at-once multivariate

- Combine similar audience segments to boost sample size

Always figure out how many people you need before you start. Otherwise, you might waste weeks on a test that can’t tell you anything useful.

Mitigating Overlapping Effects

Variables in multivariate tests can mess with each other in ways you don’t expect. Maybe a red button does great with one headline but tanks with another—it happens.

Common Interaction Problems:

- Visual elements fighting for attention

- Headlines and CTAs sending mixed messages

- Layout changes making stuff hard to see

Multivariate testing checks multiple elements at once to see how combos work. But sometimes, these interactions hide what’s actually working.

Mitigation Techniques:

- Test related elements together (like headlines and subheadings)

- Put unrelated variables in separate tests

- Use full factorial designs if you care about interactions

- Look at both individual elements and combinations

Digging into the stats can help you spot real interaction effects. Focus on combos that actually boost your numbers across the board.

Applications in Digital Marketing

Marketers love A/B and multivariate testing for dialling in campaign performance. These tests help you make decisions based on data, not hunches.

Email Marketing Campaigns

Try out different subject lines, send times, and layouts. A/B tests pit two emails against each other, but multivariate testing lets you test lots of changes at once.

Website Optimisation

Tweak landing pages, product pages, or checkout flows. Changing up headlines, button colours, or image spots can really move your conversion rate.

Social Media Advertising

Platforms let you test ad copy, images, and targeting. You can figure out which combos get the most clicks for the least cash.

| Marketing Channel | Common Test Elements | Primary Goal |
|---|---|---|
| Email | Subject lines, send times, content | Open rates, click-through rates |
| Website | Headlines, buttons, layouts | Conversion rates |
| Social Ads | Copy, images, targeting | Engagement, CPA reduction |
| PPC Campaigns | Ad text, keywords, landing pages | Click-through rates, quality scores |

Pay-Per-Click Campaigns

Google Ads and similar tools let you test ad text, keywords, and landing pages. It’s all about boosting your quality score and cutting advertising costs.

Smart marketers use these methods to save big on campaigns. Some report cutting their cost per acquisition by over 60% just by testing smarter.

Emerging Trends in AB Multivariate Testing

AI-powered experimentation is shaking things up. Some platforms now use machine learning to try to predict winning variations before tests finish, so you can get answers faster.

Real-time personalisation is getting big. Websites swap out content on the fly based on what users do, so everyone sees the version most likely to convert.

Data-driven decision making keeps levelling up. New analytics tools dig deeper into how variables interact, so you can spot patterns you’d never have noticed before.

Mobile-first testing is on the rise. More teams now focus on mobile experience first, since that’s where most users are hanging out.

Cross-platform experimentation lets you test stuff across email, web, and apps all at once. This way, your messaging stays consistent everywhere.

| Trend | Benefit |
|---|---|
| AI Integration | Faster results prediction |
| Real-time Personalisation | Instant optimisation |
| Advanced Analytics | Better insights |
| Mobile-first | Improved mobile experience |
| Cross-platform | Consistent messaging |

Automated test creation makes life easier. Now, even folks who aren’t super technical can set up complex tests with a few clicks.

Privacy-focused testing is also growing. New methods help you test while keeping user data safe and staying compliant with privacy rules.

The future of multivariate testing is all about speed and smart insights. Tests will get faster, and you’ll get more useful answers than ever.

Conclusion

So, here’s the deal: A/B testing is perfect if you’re making basic tweaks or running a smaller site. You just pit two versions of something—like a headline or button—against each other and see what wins.

Multivariate testing, though, feels more like a deep dive. It’s what you’d use if you want to play around with several elements at once. But you’ll need a lot more traffic to get real answers.

Which one should you pick? It really comes down to these three things:

| Factor | A/B Testing | Multivariate Testing |
|---|---|---|
| Traffic Volume | Low to medium | High |
| Complexity | Simple changes | Multiple elements |
| Resources | Fewer required | More extensive |

If you’re just testing a headline or a button and don’t have a ton of visitors, A/B testing makes way more sense.

But if your site gets a steady flood of visitors, multivariate testing can show you how different parts of your page interact. A/B testing might miss those details.

Budget and timeline matter, too. A/B tests usually wrap up faster and cost less than the big multivariate projects.

Honestly, mixing both approaches can work great. Teams often kick things off with A/B tests to nail the big stuff, then switch to multivariate testing to fine-tune the details.

Whatever you pick, you’ll need enough data to be sure your results mean something. That’s non-negotiable.

Just make sure your testing matches your business goals and the resources you’ve got. That’s how you get the best results.