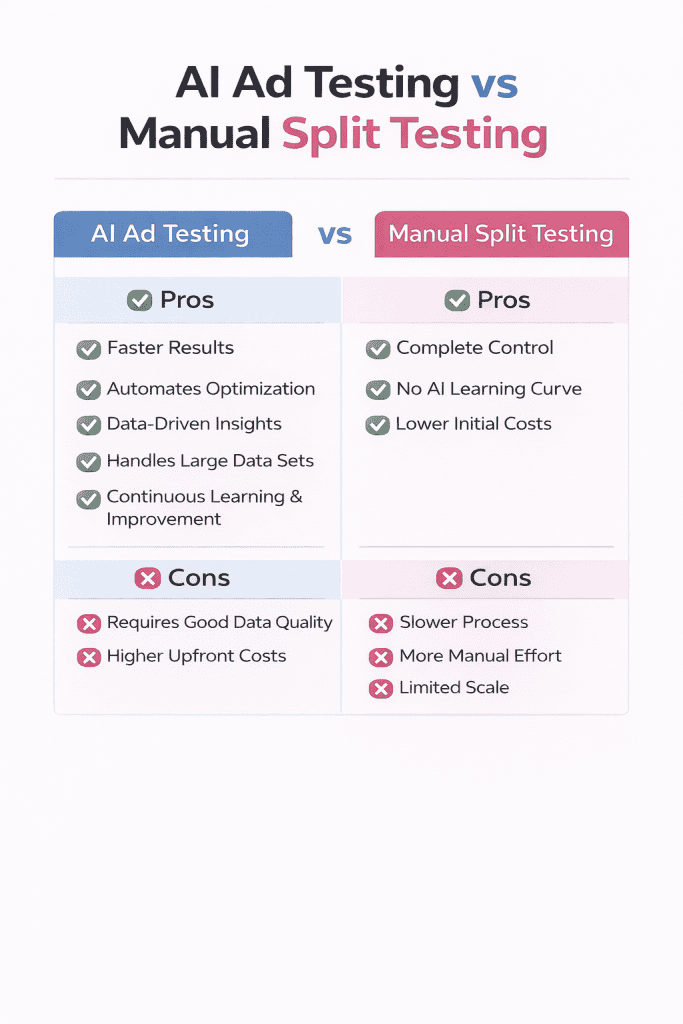

Digital advertising has evolved rapidly with the rise of artificial intelligence. While traditional manual split testing has been the go-to method for many marketers, AI-driven optimization offers a new approach to testing ad performance and improving results.

The key difference between AI and manual testing lies in automation and scale: AI can analyze multiple variables simultaneously and make real-time adjustments, while manual testing requires individual setup and monitoring of each test variant. Manual testing often provides more hands-on control over testing parameters, making it valuable for specific campaign objectives and smaller-scale tests.

Both methods have distinct advantages in modern advertising. AI excels at processing large amounts of data and identifying patterns humans might miss, while manual testing allows for more nuanced creative decisions and strategic adjustments based on market knowledge.

Key Takeaways

- AI testing analyzes multiple variables simultaneously while manual testing focuses on controlled individual changes

- Manual testing provides greater control over creative elements and testing parameters

- AI-powered testing reduces time investment and scales testing capabilities across large campaigns

Understanding Ad Testing Approaches

Effective ad testing helps businesses improve their marketing performance through data-driven decisions. Careful measurement of results is essential.

Overview of Split Testing

Split testing, also called A/B testing, divides an audience into groups and shows each group a different ad version. We change one variable at a time so that any difference in performance can be attributed to that variable.

The key elements of split testing include:

- Ad creative variations

- Target audience segments

- Performance metrics

- Statistical significance

Testing helps us identify which ad elements resonate most with our audience. We can test headlines, images, copy, calls-to-action, and other components.
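To make the "statistical significance" element concrete, here is a minimal sketch of a two-proportion z-test comparing the click-through rates of two ad versions. The function name and the click and view counts are hypothetical, and the z-test is only one common way to check significance; it is not necessarily the method any particular ad platform uses.

```python
import math

def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Return (z, two-sided p-value) for the difference in rates between A and B."""
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    # Pooled rate under the null hypothesis that both versions perform the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value of a standard normal: P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts: version B gets more clicks on the same number of views.
z, p = two_proportion_z_test(clicks_a=200, views_a=10_000, clicks_b=260, views_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests the difference is unlikely to be random noise. Checking it repeatedly while the test is still running inflates the false-positive rate, so it is safer to fix the sample size in advance.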

What Is AI Ad Testing?

AI-powered testing tools automate the creation and analysis of ad variations. These systems use machine learning to rapidly test multiple creative options.

Benefits of AI testing:

- Speed: Tests run simultaneously across many variations

- Scale: Handles large amounts of data efficiently

- Pattern recognition: Identifies subtle performance trends

- Real-time optimization: Adjusts campaigns automatically

Traditional Manual Split Testing

Manual testing approaches rely on human expertise to design, implement and analyze test results. Marketing teams create distinct ad versions and monitor their performance.

Key aspects include:

- Direct control over test variables

- Human insight into audience behavior

- Flexible testing parameters

- Detailed qualitative analysis

We carefully track metrics like click-through rates, conversions, and engagement to determine winning variations. Manual testing works well for smaller campaigns where deep human understanding is valuable.

Core Differences Between AI Ad Testing and Manual Split Testing

AI and manual testing each bring unique strengths to ad testing. The choice between them impacts speed, accuracy, and the types of insights we can uncover.

Human Expertise Versus Artificial Intelligence

Manual split testing relies on human judgment and creativity to spot nuanced issues and opportunities. Testers can interpret subtle context and user behavior that AI might miss.

AI testing uses machine learning and natural language processing to analyze patterns across massive datasets. This allows for quick identification of trends and anomalies.

Human testers excel at understanding emotional responses and cultural factors in ad performance. They can make intuitive decisions based on market knowledge and experience.

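AI systems work 24/7 without fatigue, processing thousands of ad variations simultaneously. They apply the same testing parameters consistently, which reduces the influence of individual human bias, although a model can still inherit bias from the data it was trained on.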

Test Case Generation and Execution

Automated systems can rapidly generate hundreds of test cases based on predefined rules and parameters. They modify elements like:

- Headlines and copy variations

- Image combinations

- Call-to-action placement

- Color schemes

- Layout alternatives
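As a rough illustration of rule-based variant generation, the sketch below builds every combination of a few creative elements. The headlines, image file names, and CTA texts are made-up examples, and `generate_variants` is a hypothetical helper rather than part of any ad platform's API.

```python
from itertools import product

# Hypothetical creative elements to combine.
headlines = ["Save 20% today", "Free shipping on every order"]
images = ["lifestyle.jpg", "product.jpg"]
ctas = ["Get started", "Join now", "Start free trial"]

def generate_variants(headlines, images, ctas):
    """Build every headline/image/CTA combination as a list of dicts."""
    return [
        {"id": f"v{i:03d}", "headline": h, "image": img, "cta": cta}
        for i, (h, img, cta) in enumerate(product(headlines, images, ctas), start=1)
    ]

variants = generate_variants(headlines, images, ctas)
print(len(variants))  # 2 * 2 * 3 = 12 combinations
```

The count grows multiplicatively with each added element, which is why exhaustive combinations are impractical to set up by hand but routine for an automated system.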

Manual testers create thoughtful, targeted test cases based on marketing strategy and audience insights. They focus on quality over quantity.

The execution speed differs dramatically. While humans need time to set up, run, and document each test, AI systems can launch many variants at once. Each variant still needs enough impressions before its results are meaningful.

Data Processing and Analysis

AI excels at processing vast amounts of testing data to identify winning ad combinations. Machine learning algorithms can predict performance patterns and recommend optimizations.

Split testing analytics through AI provide:

- Real-time performance metrics

- Statistical significance calculations

- Audience segment analysis

- Automated reporting

Manual analysis allows for deeper interpretation of results within business context. Human analysts can connect data points to broader marketing goals and customer behavior patterns.

Teams can spot unexpected insights that automated systems might overlook. This human touch helps translate raw data into actionable strategies.

Testing Process and Methodology

AI and manual testing each require specific processes to ensure accurate results. Testing methods must follow structured approaches to generate reliable data for making informed decisions.

Control and Variation Groups

Traditional A/B testing methods split audiences into distinct groups to compare performance. The control group represents the baseline ad or current best performer.

We keep this group unchanged throughout the test period. Variation groups test new elements like different headlines, images, or ad copy.

For accurate results in a manual test, we change only one element at a time. By contrast, AI-driven testing systems can handle multiple variations simultaneously, adjusting test parameters in real time based on performance data.
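One common technique for adjusting traffic in real time is a multi-armed bandit. The article does not name a specific algorithm, so the Thompson sampling sketch below is an assumption chosen for illustration. The variant names and true conversion rates are simulated, not real campaign data.

```python
import random

def thompson_pick(stats, rng):
    """Pick the variant whose sampled conversion rate is highest.

    stats maps variant name -> [conversions, non_conversions].
    """
    best_name, best_draw = None, -1.0
    for name, (successes, failures) in stats.items():
        # Beta(1 + successes, 1 + failures) is the posterior under a uniform prior.
        draw = rng.betavariate(1 + successes, 1 + failures)
        if draw > best_draw:
            best_name, best_draw = name, draw
    return best_name

def simulate(true_rates, impressions, seed=0):
    """Serve impressions one at a time, updating each variant's counts as results arrive."""
    rng = random.Random(seed)
    stats = {name: [0, 0] for name in true_rates}
    for _ in range(impressions):
        name = thompson_pick(stats, rng)
        converted = rng.random() < true_rates[name]
        stats[name][0 if converted else 1] += 1
    return stats

# Simulated true conversion rates; in practice these are unknown.
stats = simulate({"A": 0.02, "B": 0.04, "C": 0.08}, impressions=20_000)
for name, (wins, losses) in stats.items():
    print(name, "impressions:", wins + losses, "conversions:", wins)
```

Unlike a fixed 50/50 split, this approach shifts traffic toward the stronger variant as evidence accumulates, at the cost of collecting less data on the weaker ones.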

Metrics and KPIs

Common performance metrics include:

- Click-through rate (CTR)

- Conversion rate

- Cost per acquisition (CPA)

- Return on ad spend (ROAS)

We track these metrics through:

- Daily monitoring

- Weekly trend analysis

- Statistical significance checks

Each test needs a primary KPI to determine success. Secondary metrics help provide context but shouldn’t influence the main decision.
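The four metrics listed above follow directly from raw campaign totals. This sketch uses hypothetical numbers and assumes conversion rate is measured per click; some teams measure it per session or per impression instead.

```python
def ad_metrics(impressions, clicks, conversions, spend, revenue):
    """Compute CTR, conversion rate, CPA, and ROAS from raw campaign totals."""
    return {
        "ctr": clicks / impressions if impressions else 0.0,
        # Assumption: conversion rate is conversions per click.
        "conversion_rate": conversions / clicks if clicks else 0.0,
        # CPA and ROAS are undefined when there are no conversions or no spend.
        "cpa": spend / conversions if conversions else None,
        "roas": revenue / spend if spend else None,
    }

# Hypothetical totals for one ad variant.
m = ad_metrics(impressions=50_000, clicks=1_000, conversions=50, spend=500.0, revenue=2_000.0)
for name, value in m.items():
    print(name, value)
```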

Test Coverage and Edge Cases

Automated testing systems can test more scenarios than manual methods.

Key areas we test include:

- Different devices and platforms

- Various audience segments

- Time-of-day performance

- Geographic variations

Edge cases require special attention:

- Holiday periods

- Extreme weather events

- Market disruptions

- Platform updates

We maintain detailed logs of all test conditions and anomalies to ensure comprehensive coverage.

Elements Tested in AI and Manual Split Testing

Split testing lets us measure how different elements affect user behavior and conversion rates. Both AI-powered and manual testing approaches help optimize key components of digital marketing campaigns.

Ad Copy and Messaging

Split testing ad content helps us identify which messages drive the best results. We can test different value propositions, tone of voice, and word choice.

AI testing tools analyze large amounts of text variations quickly to find winning combinations. This includes testing formal vs casual language, short vs long copy, and different emotional appeals.

Manual testing gives us more control over nuanced messaging elements. We carefully craft variations to test specific hypotheses about what resonates with our audience.

Headlines and Subject Lines

Strong headlines and subject lines grab attention and boost open rates. We use AI to analyze patterns in high-performing headlines across industries.

The AI can rapidly test dozens of variations to identify the most effective headline formulas. Key elements we test include:

- Length (short vs long)

- Numbers vs no numbers

- Questions vs statements

- Power words and emotional triggers

Calls to Action (CTAs) and Buttons

Effective CTAs drive users to take desired actions. We test multiple elements including:

Button Text:

- Action verbs (“Get” vs “Start” vs “Join”)

- Urgency phrases (“Now” vs “Today”)

- Value propositions

Visual Elements:

- Button colors and contrast

- Size and placement

- Shape and border styles

AI testing can quickly analyze which CTA combinations generate the highest click-through rates across different audience segments.

Evaluating Outcomes: User Behavior and Conversion Metrics

AI-powered testing helps us measure and analyze key performance indicators with greater precision than traditional manual methods. Proper evaluation of these metrics gives us clear insights into campaign effectiveness and return on investment.

User Experience and Engagement

User behavior analysis shows us how visitors interact with different versions of our content. We track metrics like:

- Time spent on page

- Scroll depth

- Number of pages visited

- Interactive elements clicked

AI testing platforms can automatically collect and analyze these engagement signals. This reduces the room for human bias in the interpretation process.

We measure user satisfaction through direct feedback mechanisms like surveys and indirect signals like repeat visits. These indicators help us understand which content variations create the best experience.

Conversion Rate and ROI

A/B testing directly impacts our bottom line through improved conversion rates. Key metrics include:

- Sales conversions

- Lead form submissions

- Email signups

- Average order value

We calculate ROI by comparing the cost of implementing changes against the revenue gains from improved conversion rates. AI helps us identify winning variations faster than manual testing.

Small improvements in conversion rates often lead to significant revenue increases when applied across large traffic volumes.
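A quick worked example shows how a small lift compounds at volume. All figures below (traffic, conversion rates, order value, and test cost) are hypothetical, and the calculation assumes traffic and average order value stay constant.

```python
def incremental_revenue(visitors, baseline_rate, new_rate, average_order_value):
    """Extra revenue from a higher conversion rate, holding traffic and order value fixed."""
    extra_orders = visitors * (new_rate - baseline_rate)
    return extra_orders * average_order_value

def roi(gain, cost):
    """Return on investment as a fraction: (gain - cost) / cost."""
    return (gain - cost) / cost

# Hypothetical: 500,000 visitors, conversion rate improves from 2.0% to 2.2%.
gain = incremental_revenue(
    visitors=500_000, baseline_rate=0.020, new_rate=0.022, average_order_value=60.0
)
print(f"Incremental revenue: {gain:,.0f}")
print(f"ROI on a 10,000 test budget: {roi(gain, 10_000):.0%}")
```

Here a 0.2 percentage point lift produces about 1,000 extra orders, which is why even modest wins can justify the cost of testing on high-traffic campaigns.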

Bounce Rate and Click-Through Rate (CTR)

These engagement metrics tell us if our content keeps visitors interested.

Bounce Rate Indicators:

- Single-page sessions

- Quick exits

- No interactions

CTR Measurements:

- Email link clicks (opens are measured separately, as open rate)

- Ad clicks

- Internal link clicks

- Call-to-action button clicks

AI testing tools help us spot patterns in user behavior that affect these metrics. We can quickly identify and fix elements causing high bounce rates or low CTRs.

Advantages of AI Ad Testing

AI brings powerful automation and data analysis capabilities to advertising that make manual testing look slow and limited. AI systems process vast amounts of data to optimize ad performance while freeing up human resources for strategic work.

Speed and Scalability

AI algorithms can automatically generate and test hundreds of ad variations simultaneously across multiple platforms and audience segments. This rapid testing at scale helps identify winning combinations quickly.

We can test different headlines, images, copy and CTAs across diverse demographics in hours instead of weeks. The AI continuously monitors performance metrics and makes real-time adjustments.

The automated nature means we can run tests 24/7 without human intervention. When market conditions or audience behaviors change, AI adapts the testing parameters automatically.

Resource Allocation and Efficiency

AI-driven testing reduces manual work by automating repetitive tasks like data collection, analysis, and reporting. This frees up marketing teams to focus on strategy and creative development.

The systems automatically allocate budget to better-performing ad variants. Poor performers get identified and paused quickly to prevent wasted spend.
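The allocation logic can be sketched with a simple rule: pause any variant whose cost per acquisition exceeds a ceiling, then split the budget among the rest in proportion to conversions per unit of spend. The `reallocate_budget` function, the CPA ceiling, and the data are all hypothetical. Real platforms use more sophisticated methods and usually wait for enough data before pausing anything.

```python
def reallocate_budget(variants, total_budget, max_cpa):
    """Pause variants whose CPA exceeds max_cpa and split the budget among the rest.

    variants maps name -> {"spend": float, "conversions": int}.
    Surviving variants get a share proportional to conversions per unit of spend.
    """
    efficiency = {}
    for name, v in variants.items():
        if v["spend"] <= 0 or v["conversions"] == 0:
            continue  # paused: nothing to measure yet (a crude simplification)
        if v["spend"] / v["conversions"] > max_cpa:
            continue  # paused: too expensive per conversion
        efficiency[name] = v["conversions"] / v["spend"]
    total = sum(efficiency.values())
    return {
        name: (total_budget * efficiency[name] / total if name in efficiency else 0.0)
        for name in variants
    }

# Hypothetical results after an initial even split of spend.
results = {
    "A": {"spend": 100.0, "conversions": 10},  # CPA 10
    "B": {"spend": 100.0, "conversions": 5},   # CPA 20
    "C": {"spend": 100.0, "conversions": 1},   # CPA 100, above the ceiling
}
budget = reallocate_budget(results, total_budget=300.0, max_cpa=25.0)
print(budget)
```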

Testing costs decrease significantly since fewer human hours are needed. One AI system can do the work of multiple manual testers while maintaining consistent quality.

Predictive Analysis and Personalization

AI analyzes historical performance data to predict which ad elements will resonate with specific audience segments. These insights help create more targeted initial variations.

Visual validation testing through AI ensures ads display correctly across devices while identifying what visual elements drive engagement.

The systems learn from each test to improve future predictions. AI can spot patterns in user behavior that humans might miss and adjust targeting accordingly.

We can deliver personalized ad experiences at scale by matching creative elements to individual user preferences and behaviors.

Limitations and Challenges of AI and Manual Methods

Both AI and manual testing methods face unique obstacles in ad testing that can impact their effectiveness and reliability. Each approach requires specific considerations to maximize success while managing potential drawbacks.

Maintenance and Ongoing Adaptation

AI testing tools need regular updates to handle new ad formats and platform changes. We’ve found that AI-powered testing systems require significant maintenance to stay current with evolving digital advertising standards.

Training data must be continuously refreshed to maintain accuracy. This process demands substantial time and resources.

Machine learning models can become less effective when ad platforms make major changes, requiring recalibration and sometimes complete retraining.

Quality Assurance and Usability Testing

Manual testing excels at catching subtle usability issues that AI might miss. We need human testers to evaluate the emotional impact and user experience of ads.

Traditional testing methods provide valuable insights into user behavior and engagement patterns that AI algorithms may not detect.

Quality assurance becomes more complex when combining both methods:

- Coordinating between AI and manual testing teams

- Reconciling conflicting test results

- Maintaining consistent testing standards

- Validating AI-generated recommendations

Test Case Coverage and Exploratory Testing

AI testing tools excel at repetitive tasks but may miss edge cases that human testers naturally discover through ad-hoc testing.

We’ve noticed that exploratory testing remains crucial for identifying unexpected issues and creative opportunities in ad campaigns.

Key testing coverage challenges:

- Handling dynamic ad content

- Testing across multiple devices and platforms

- Verifying personalization features

- Checking interactive ad elements

Test automation requires a careful balance between AI efficiency and manual oversight to ensure comprehensive coverage.

Hybrid Approaches: Combining AI with Manual Testing

Hybrid testing approaches blend AI automation with human expertise to create more effective ad testing workflows. This combination lets us maintain speed and efficiency while ensuring ads connect with real customer preferences.

Making the Most of Human Expertise

Human testers excel at evaluating subjective elements like emotional impact and brand alignment. We focus our manual testing on creative aspects that AI can’t fully grasp.

Creative professionals provide valuable insights about audience psychology and cultural nuances that shape ad performance. Their expertise helps identify subtle issues in messaging and design.

We use human judgment to set testing parameters and success metrics that align with business goals. This ensures automated tools work within meaningful boundaries.

Leveraging Automated Tools

AI testing tools rapidly analyze large amounts of ad variations and performance data. We can test hundreds of combinations in the time it would take to manually review just a few.

Smart testing platforms handle tasks like:

- Performance tracking across multiple channels

- Real-time budget optimization

- Audience segment analysis

- Click-through rate monitoring

The automation helps us quickly customize ads based on data while maintaining the human-approved creative direction. This creates a feedback loop between AI insights and manual refinements.

Best Practices for Optimizing Ad Testing Strategy

Effective ad testing requires careful planning and systematic execution to generate meaningful insights. Strategic targeting combined with thoughtful creative variations leads to the most valuable test results.

Audience Targeting and Personalization

A/B testing success starts with precise audience segmentation. We recommend testing ads with specific demographic groups, interests, and behaviors to understand what resonates with each segment.

Creating separate test groups based on customer journey stages helps measure impact at different touchpoints. For example, new visitors might respond differently to ads compared to returning customers.

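We must ensure test groups are large enough for statistical significance but focused enough to deliver clear insights. A common rule of thumb is at least 1,000 users per test variation, though the number actually needed depends on the baseline conversion rate and the size of the lift we want to detect.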
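To estimate how many users a test needs, we can use the standard sample-size formula for comparing two proportions. This is a sketch under stated assumptions: a two-sided test at 5% significance with 80% power, and hypothetical baseline and target conversion rates.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate users needed per variant to detect the given change in rate."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(
            baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
        )
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)

# Hypothetical: detect an improvement from a 2.0% to a 2.5% conversion rate.
n = sample_size_per_variant(baseline_rate=0.020, expected_rate=0.025)
print(n)
```

With these inputs the requirement comes out well above 10,000 users per variation, so a fixed 1,000-user minimum is best treated as a floor. Low baseline rates and small expected lifts push the number up quickly.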

Optimizing Variations for Maximum Impact

Creative testing elements should focus on one variable at a time:

- Headlines and copy

- Visual elements (images, videos, colors)

- Call-to-action buttons

- Ad formats and placements

Test variations need clear differentiation while maintaining brand consistency. We recommend starting with bold changes rather than subtle tweaks to identify significant performance differences.

Keep test duration between one and two weeks to balance statistical confidence with the need for quick insights. This helps avoid ad fatigue while gathering enough data.

Frequently Asked Questions

AI-powered ad testing automates and optimizes the entire testing process while traditional manual methods require more hands-on work. These differences affect speed, accuracy, scalability, and insights.

What are the differences and benefits of AI-driven ad testing compared to traditional split A/B testing?

AI-driven testing can analyze multiple variables simultaneously while traditional methods test one change at a time.

AI testing learns and adapts in real time based on performance data, making continuous improvements automatically. Manual split testing requires marketers to review results and make adjustments themselves.

The AI approach reduces human bias in testing by using data-driven decisions rather than gut feelings or assumptions.

How do automated A/B testing tools compare to manual split testing in terms of accuracy and effectiveness?

Automated testing tools process much larger data sets more quickly and accurately than manual methods.

AI tools can detect subtle patterns and correlations that humans might miss during manual analysis.

The consistent application of testing parameters by AI systems leads to more reliable results compared to manual processes that may have inconsistencies.

What advantages do AI ad testing solutions offer over manual ad performance evaluation methods?

AI solutions can simultaneously test hundreds of ad variations across multiple platforms and audiences.

The systems provide real-time performance metrics and automatically adjust campaigns based on incoming data.

Machine learning algorithms can predict which ad elements will perform best before full testing is complete.

How do testing AI agents optimize the ad testing process beyond standard automation testing techniques?

AI agents use advanced pattern recognition to identify winning ad combinations faster than basic automated tools.

The systems learn from each test to improve future testing accuracy and efficiency.

Machine learning models can suggest new testing variations based on historical performance data.

In what ways can AI ad testing impact the scalability of testing compared to manual A/B testing methods?

AI testing platforms can handle far more test variations without requiring additional human resources.

The automated nature of AI testing allows companies to expand testing across multiple markets and languages simultaneously.

What are the potential challenges when implementing AI for ad testing versus traditional manual testing approaches?

Initial setup costs and technical integration requirements can be higher for AI testing platforms.

Teams need training to properly interpret AI-generated insights and recommendations.

Some AI systems require large amounts of historical data to perform effectively, which new campaigns may lack.