Large language models are transforming how businesses approach digital marketing and user engagement. Using LLMs for ad creation has shown promising results in improving both click-through rates and conversion rates.

Improvements of up to 30% in marketing campaign performance are sometimes cited for LLM-powered personalization and predictive analytics, though actual results depend on the campaign, the audience, and the baseline being compared against.

LLMs excel at processing user behavior data and generating targeted content that resonates with specific audience segments. By analyzing patterns in user interactions, these models can predict which content will perform best for different customer groups.

We’ve seen remarkable success when integrating LLMs into sales and marketing strategies, particularly in automating content creation and personalizing customer experiences at scale.

Key Takeaways

- LLMs can analyze user behavior patterns to predict and optimize click-through rates

- AI-powered content generation creates personalized marketing messages that convert better

- Automated optimization systems can scale marketing efforts while maintaining quality

Fundamentals of LLMs in CTR and Conversion Optimization

Large language models have transformed how we predict and optimize click-through rates and conversions by processing vast amounts of user interaction data and semantic patterns. These AI systems excel at understanding user intent and content relevance at scale.

How Large Language Models Work

Large language models analyze text patterns using neural networks trained on massive datasets. They process information through multiple layers to understand context and meaning.

We can think of LLMs as pattern recognition engines that map relationships between words, phrases, and concepts. These models use attention mechanisms to focus on relevant parts of input data.

The key strength of LLMs lies in their ability to understand natural language variations and user intent. This makes them valuable for matching user queries with relevant content.
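The attention mechanism mentioned above can be illustrated with a minimal sketch of scaled dot-product attention for a single query. The vectors are toy values and the function names are chosen for illustration; production models apply the same idea across many heads and layers using tensor libraries.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is a weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Toy example: the query is aligned with the first key.
weights, output = attention(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(weights, output)
```

The key that points in the same direction as the query receives the larger weight, so its value contributes more to the output.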

Key Concepts: Click-Through Rate and Conversion Rate

Click-through rate (CTR) is the percentage of users who click on a piece of content after viewing it. CTR prediction estimates that probability before the content is shown. CTR helps us understand content effectiveness and user interest.

Conversion rate tracks the percentage of users who complete desired actions, like purchases or sign-ups. These metrics work together to measure campaign success.
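As a quick worked example, both metrics are simple ratios. The campaign numbers below are hypothetical, and the sketch assumes conversion rate is measured per click; some teams measure it per visitor or per session instead.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Fraction of impressions that resulted in a click."""
    if impressions <= 0:
        return 0.0
    return clicks / impressions


def conversion_rate(conversions: int, clicks: int) -> float:
    """Fraction of clicks that ended in the desired action."""
    if clicks <= 0:
        return 0.0
    return conversions / clicks


# Hypothetical campaign numbers, for illustration only.
impressions, clicks, conversions = 10_000, 250, 20
ctr = click_through_rate(clicks, impressions)
cvr = conversion_rate(conversions, clicks)
print(f"CTR: {ctr:.2%}, conversion rate: {cvr:.2%}")
```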

LLMs enhance CTR prediction by analyzing:

- User behavior patterns

- Content relevance

- Semantic relationships

- Historical performance data

Relevance of Language Modeling to User Engagement

Semantic information plays a crucial role in shaping user decisions. LLMs process this information to better match content with user preferences and intent.

We use language modeling to:

- Analyze user search queries

- Understand content context

- Predict user interests

- Generate relevant recommendations

By processing both explicit and implicit signals, LLMs help create more engaging user experiences. They identify subtle patterns that traditional systems might miss.

The real-time processing capabilities of LLMs enable dynamic content optimization based on user behavior and preferences.

Personalization Strategies Using LLMs

LLMs analyze user behavior patterns and preferences to create custom experiences that boost engagement and sales. These AI systems process vast amounts of data to deliver highly relevant content and recommendations in real-time.

Adapting to User Preferences with Machine Learning

We’ve found that LLMs can learn and adapt to individual user behaviors by analyzing browsing patterns, purchase history, and interaction data.

The AI models identify key preferences through:

- Past purchase categories

- Time spent viewing specific items

- Click patterns and navigation flow

- Abandoned cart items

- Seasonal buying trends

This deep learning approach helps create dynamic user profiles that evolve over time. We can adjust content and offerings based on both explicit preferences and implicit behavioral signals.

Generating Tailored Product Descriptions

LLMs excel at creating unique product descriptions that resonate with different customer segments. Personalized communication is often credited with engagement gains on the order of 20%, though the figure varies by audience and channel.

Key benefits of AI-generated descriptions:

- Tone adjustment based on customer demographics

- Dynamic pricing recommendations

- Feature highlighting based on user interests

- Multilingual content adaptation

- SEO-optimized variations

Enhancing Customer Interaction and Experience

When users feel understood, they show increased brand loyalty. LLMs enable real-time conversation adjustments and personalized support.

We implement these features for better engagement:

- 24/7 personalized chat support

- Custom product recommendations during conversations

- Contextual help based on user history

- Proactive issue resolution

- Sentiment-aware responses

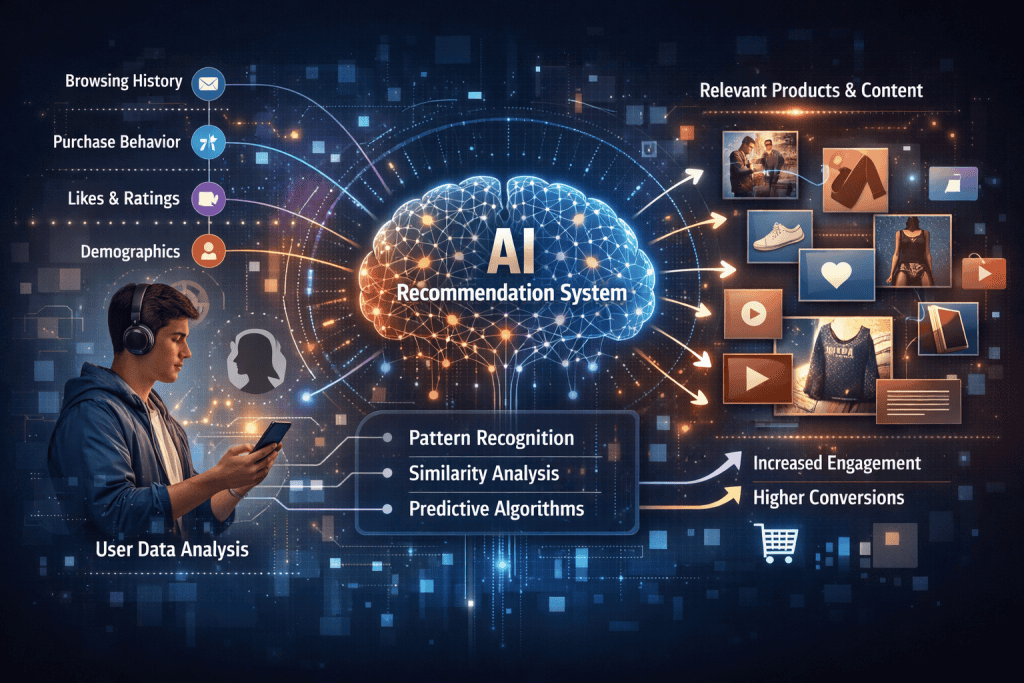

Utilizing Customer Data for Improved Recommendations

Our recommendation systems use LLMs to process customer data and create highly targeted suggestions. This leads to higher conversion rates through effective personalized offerings.

The AI analyzes multiple data points:

- Purchase history

- Browsing behavior

- Customer demographics

- Similar user patterns

- Market trends

These insights help create precise product matches and boost sales through strategic cross-selling opportunities.

CTR Prediction and Ranking Models Powered by LLMs

Large language models are transforming click-through rate prediction by generating sophisticated explanations and capturing complex user-item interactions. These advances lead to more accurate predictions and better rankings for real-world applications.

LLM-Based Click-Through Rate Prediction

Frameworks such as ExpCTR combine LLMs with traditional CTR models to generate meaningful explanations for predictions. This approach helps capture user intentions more effectively.

We’ve seen significant improvements in prediction accuracy when LLMs are used to model user-item feature interactions. The models excel at understanding subtle patterns in user behavior.

Recent research suggests LLM-based approaches can outperform conventional CTR prediction methods on some benchmarks, though computational efficiency remains a challenge.

CTR Model Architectures

One effective architecture pattern uses a three-stage training pipeline:

- Preference learning alignment

- Feature enhancement through LLM explanations

- Explanation refinement and consistency checks

Advanced CTR models now incorporate both user-item features and LLM-generated insights as input signals.

Key Components:

- Attention mechanisms for feature selection

- User preference modeling

- Real-time inference optimization

A/B Testing and Evaluation Metrics

We measure CTR model performance through rigorous A/B testing of different architectures and configurations. The primary metrics are:

- Click-through rate lift

- Conversion rate improvement

- Response time at scale

Efficient training approaches help balance accuracy with computational costs during testing phases.

Real-world deployment requires careful monitoring of both prediction accuracy and system latency. Regular A/B tests help validate model improvements across different user segments.
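To decide whether a measured CTR lift is more than noise, one common choice is a two-proportion z-test. The sketch below is a minimal version under that assumption; the click counts are hypothetical, and the source does not specify which statistical test is used.

```python
import math


def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Compare the CTR of variant B against control A.

    Returns (relative lift, z statistic, two-sided p-value).
    """
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    lift = (p_b - p_a) / p_a if p_a > 0 else 0.0
    if std_err == 0:
        return lift, 0.0, 1.0
    z = (p_b - p_a) / std_err
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return lift, z, p_value


# Hypothetical counts: control model A vs. candidate model B.
lift, z, p_value = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"lift={lift:.1%}, z={z:.2f}, p={p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be chance alone, but the threshold and the minimum sample size should be fixed before the test starts.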

Advanced Recommendation Systems for Conversion Improvement

Modern recommendation systems use sophisticated AI techniques to match users with relevant products and content. These systems analyze patterns and similarities to predict what will drive conversions.

Embedding-Based Retrieval and Semantic Similarity

Advanced search and retrieval systems use embeddings to understand the meaning behind user queries. We convert text and product descriptions into numerical vectors that capture semantic relationships.

These vectors let us measure how similar items are to each other. When a user searches for “comfortable running shoes,” the system finds products with similar embedding patterns.

We enhance query understanding by mapping related terms and concepts. A search for “athletic footwear” will match listings for “sneakers” and “training shoes.”
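The retrieval step described above can be sketched with cosine similarity over embedding vectors. The three-dimensional vectors here are hand-written stand-ins; in practice they would come from an embedding model, which this sketch does not include.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical product embeddings, for illustration only.
PRODUCT_EMBEDDINGS = {
    "cushioned running shoes": [0.9, 0.8, 0.1],
    "trail sneakers": [0.8, 0.6, 0.2],
    "leather office shoes": [0.3, 0.1, 0.9],
}


def search(query_embedding, catalog, top_k=2):
    """Return the names of the top_k products most similar to the query."""
    scored = [
        (cosine_similarity(query_embedding, vector), name)
        for name, vector in catalog.items()
    ]
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_k]]


# Stand-in embedding for the query "comfortable running shoes".
query = [0.85, 0.75, 0.15]
print(search(query, PRODUCT_EMBEDDINGS))
```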

User Embeddings and Behavioral Modeling

Continuous learning systems track user interactions to build detailed behavioral profiles. We analyze:

- Past purchases

- Browsing history

- Time spent viewing items

- Click patterns

- Cart additions

These signals help create rich user embeddings that capture preferences and intent. The more a user interacts, the more accurate their profile becomes.
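One simple way to turn these signals into a user embedding is a weighted average of the embeddings of items the user interacted with. The sketch below assumes that approach; the event weights and item vectors are illustrative values, not tuned ones, and learned sequence models are a common alternative.

```python
# Assumed weights: stronger signals count more. These are illustrative only.
INTERACTION_WEIGHTS = {"view": 1.0, "cart": 3.0, "purchase": 5.0}


def build_user_embedding(events, item_embeddings):
    """Average item embeddings, weighted by how strong each interaction was.

    events: list of (item_id, event_type) tuples.
    item_embeddings: dict mapping item_id to a list of floats.
    """
    dim = len(next(iter(item_embeddings.values())))
    total = [0.0] * dim
    weight_sum = 0.0
    for item_id, event_type in events:
        weight = INTERACTION_WEIGHTS.get(event_type, 0.0)
        vector = item_embeddings.get(item_id)
        if vector is None or weight == 0.0:
            continue  # skip unknown items and unknown event types
        for i, value in enumerate(vector):
            total[i] += weight * value
        weight_sum += weight
    if weight_sum == 0.0:
        return total  # no usable history: zero vector
    return [value / weight_sum for value in total]


items = {"shoe": [1.0, 0.0], "watch": [0.0, 1.0]}
history = [("shoe", "purchase"), ("watch", "view")]
print(build_user_embedding(history, items))
```

Each additional interaction shifts the average, which is one concrete sense in which a profile becomes more accurate as a user interacts more.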

Item Embeddings for Product Recommendations

Product embeddings capture key attributes and relationships between items. We look at:

- Visual features

- Category hierarchies

- Common purchase patterns

- Price points

- Brand relationships

Residual connections between these embedding layers can improve CTR prediction accuracy. The system learns which product combinations drive higher conversion rates.

Real-time updates keep recommendations fresh as user preferences and market trends evolve. This dynamic approach maintains engagement and purchase rates.

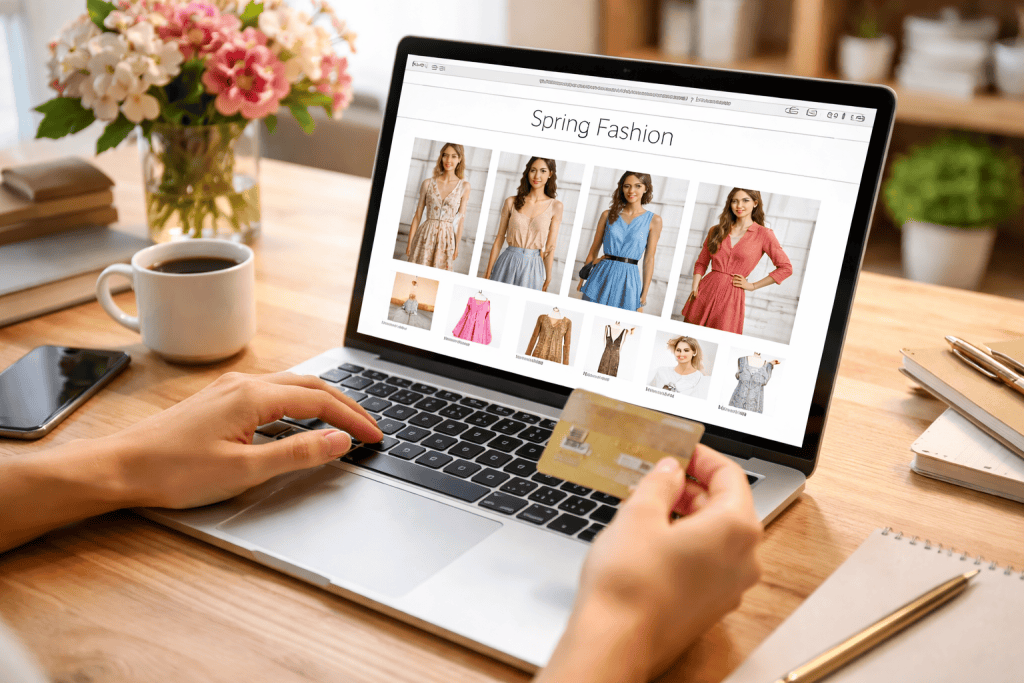

Application of LLMs in E-Commerce

LLMs have transformed how online stores connect with shoppers and sell products. These AI models help create better product descriptions and suggest items that customers might not find on their own.

Optimizing Content Understanding for Product Pages

Advanced natural language processing enables us to create detailed, accurate product descriptions that match how customers actually search.

We’ve seen that LLMs can analyze product features, specifications, and customer reviews to generate rich, informative content that helps buyers make decisions.

By using content-derived features, we can automatically tag and categorize products based on their descriptions, making them easier to find in search results.

Long-Tail Item Recommendations

Intelligent recommendation systems now help customers discover unique products they might never find through traditional browsing.

LLMs analyze shopping patterns and product relationships to suggest items from the less visible parts of an online store’s catalog.

We can boost sales of niche products by connecting them with the right customers through personalized recommendations. This helps reduce inventory of slow-moving items while increasing customer satisfaction.

These systems work especially well for specialty stores with large, diverse product catalogs where many valuable items might otherwise go unnoticed.

Model Architectures and Scalability Considerations

Modern LLM architectures offer powerful tools for enhancing click-through rates and conversions. These systems combine advanced transformer technology with scalable implementations to process and generate content that drives user engagement.

Transformer Models and GPT Variants

Large language models like GPT-3 and LLaMA use transformer architectures to understand user intent and generate compelling content. These models excel at creating personalized product descriptions and targeted ad copy.

We’ve found that GPT variants show particular strength in:

- Crafting dynamic call-to-action texts

- Personalizing product recommendations

- Optimizing ad headlines and descriptions

PaLM and newer transformer models introduce improved attention mechanisms that help better capture user preferences and browsing patterns.

Hybrid and Multimodal Architectures

Multimodal architectures combine text and visual processing to create more engaging user experiences. The BeeFormer and M3CSR frameworks analyze both product images and descriptions to improve conversion rates.

Key components include:

- Modal encoders for processing images

- Transformer-based video encoders for rich media content

- Multi-task production ranking models for optimized recommendations

Addressing Scalability in Large-Scale Systems

Efficient scaling techniques are essential for maintaining performance as user traffic grows. We implement distributed training frameworks to handle increasing computational demands.

Critical scaling factors:

- GPU optimization for faster inference

- Load balancing across multiple servers

- Memory-efficient model deployment

The ELSA framework helps manage resource allocation while maintaining quick response times. We use parallel processing to ensure consistent performance even during high-traffic periods.

Data Generation, Training Paradigms, and Optimization Techniques

Modern LLMs enable powerful data generation and training approaches that directly impact CTR and conversion rates. Advanced techniques like masked modeling and contrastive learning help create high-quality synthetic data while maintaining robust model performance.

Adaptive Finetuning and Masked Language Modeling

Data augmentation using LLMs provides effective ways to generate synthetic training examples. We use masked language modeling to predict missing values in tabular data, which helps create realistic variations of existing conversion data.

The adaptive finetuning process involves:

- Gradually adjusting model parameters based on real user interaction patterns

- Fine-tuning on domain-specific CTR data

- Using masked tabular modeling to handle structured conversion data

Combining multiple modal encoders helps capture both textual and numerical features in CTR prediction tasks.
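The masked tabular modeling idea can be sketched as a data-preparation step: hide some cells of a row and keep the hidden values as prediction targets. The column names, the `[MASK]` token, and the masking probability below are assumptions made for illustration; the model that fills in the masks is not shown.

```python
import random

MASK_TOKEN = "[MASK]"  # assumed placeholder token


def mask_row(row, mask_probability=0.3, rng=None):
    """Create one masked training example from a tabular row.

    Returns (inputs, targets): inputs has some values replaced by MASK_TOKEN,
    targets holds the original values of the masked columns.
    """
    rng = rng or random.Random()
    columns = list(row)
    masked_columns = [c for c in columns if rng.random() < mask_probability]
    if not masked_columns:
        # Always mask at least one column so the example has a target.
        masked_columns = [rng.choice(columns)]
    inputs = {c: (MASK_TOKEN if c in masked_columns else row[c]) for c in columns}
    targets = {c: row[c] for c in masked_columns}
    return inputs, targets


# Hypothetical conversion record.
record = {"device": "mobile", "channel": "email", "hour": 20, "converted": 1}
inputs, targets = mask_row(record, rng=random.Random(0))
print(inputs)
print(targets)
```

Passing a seeded `random.Random` keeps the masking reproducible across runs, which helps when comparing training configurations.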

Contrastive Learning Approaches

Instance-level contrastive learning strengthens the model’s ability to distinguish between high and low-converting examples. We create positive and negative pairs of conversion scenarios to train the model.

Key benefits include:

- Better separation between converting and non-converting cases

- Improved feature representations for CTR prediction

- More robust performance across different user segments

The model learns to maximize the distance between dissimilar examples in embedding space while minimizing the distance between similar ones.
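The objective just described can be sketched with the classic margin-based pairwise contrastive loss. This is one standard formulation, chosen here for brevity; the source does not say which loss is used, and InfoNCE-style losses are a common alternative.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(a, b, is_similar, margin=1.0):
    """Pairwise contrastive loss.

    Similar pairs are penalized for being far apart.
    Dissimilar pairs are penalized only if closer than the margin.
    """
    distance = euclidean_distance(a, b)
    if is_similar:
        return distance ** 2
    return max(0.0, margin - distance) ** 2


# Toy embeddings: two converting sessions and one non-converting session.
converting_1 = [0.9, 0.1]
converting_2 = [0.8, 0.2]
non_converting = [0.1, 0.9]

print(contrastive_loss(converting_1, converting_2, is_similar=True))
print(contrastive_loss(converting_1, non_converting, is_similar=False))
```

Minimizing this loss over many pairs pulls similar examples together and pushes dissimilar ones at least a margin apart.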

Overfitting, Evaluation, and Generalization

Regular evaluation on held-out test sets of real data helps detect overfitting when training on synthetic data. We monitor key metrics like AUC-ROC and precision-recall curves throughout the training process.

Important evaluation strategies include:

- Cross-validation across different time periods

- Testing on multiple customer segments

- Comparing performance against baseline models

Learning paradigms for LLMs suggest that using a mix of real and synthetic data provides better generalization than using either alone.
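For reference, the AUC-ROC metric mentioned in this section can be computed directly from its definition: the probability that a randomly chosen positive example is scored above a randomly chosen negative one. The sketch below uses that pairwise definition, which is fine for small evaluation sets; libraries use a faster rank-based method.

```python
def roc_auc(labels, scores):
    """AUC-ROC via pairwise comparison of positive and negative scores.

    labels: 1 for click/conversion, 0 otherwise.
    scores: model-predicted probabilities or scores.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Toy held-out set: clicked examples are labeled 1.
labels = [1, 0, 1, 0, 0]
scores = [0.9, 0.2, 0.6, 0.7, 0.1]
print(roc_auc(labels, scores))
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect separation, which makes the metric convenient for comparing against baseline models.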

Real-World Applications and Use Cases

Companies using LLMs strategically have seen major gains in click-through rates and conversions. These AI tools excel at personalizing interactions and optimizing search results to match user intent.

Chatbots and Customer Support

LLM-powered chatbots handle customer inquiries 24/7 with personalized responses that sound natural and human-like. They understand context and can maintain consistent conversations.

When integrated with product catalogs and user data, these chatbots recommend items based on browsing history and past purchases. This targeted approach has helped businesses achieve up to 30% higher conversion rates.

Chatbots excel at qualifying leads by asking relevant questions and directing users to appropriate product pages or sales representatives. This pre-qualification process improves the quality of leads reaching human sales teams.

Job Matches and Search Optimization

Companies like Mercado Libre use LLMs to enhance their search functionality by understanding complex queries and matching them with relevant listings.

The same semantic approach applies to job platforms, where the AI analyzes job descriptions and candidate profiles to create better matches. This understanding goes beyond simple keyword matching.

Figures reported for LLM-optimized job search platforms include:

- 40% faster candidate placement

- 25% higher application completion rates

- Better quality matches between skills and requirements

Search results become more relevant over time as the system learns from user interactions and click patterns. This continuous improvement helps maintain high conversion rates.

Architecture Components and Retrieval Methods

Modern retrieval systems combine efficient indexing with specialized neural architectures to deliver faster, more accurate results. The key components work together to balance computational costs with performance gains.

Approximate Nearest Neighbor Indices

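Advanced search systems rely on ANN indices to quickly find similar items in large datasets. Many of these indices, such as inverted-file (IVF) designs, organize dense content embeddings into clusters using k-means, so a query only has to be compared against items in the closest clusters.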

We can achieve up to 100x faster retrieval speeds compared to exact nearest-neighbor search, often with only minimal accuracy loss. The actual speedup depends on dataset size and index settings, but the trade-off is worth it for most real-world applications.
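The clustering idea behind this speedup can be sketched as a tiny inverted-file index. The centroids are given as fixed inputs here, on the assumption that they were produced earlier by k-means; the item vectors and the `n_probe` parameter name are illustrative.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_index(vector, candidates):
    """Position of the candidate vector closest to the given vector."""
    return min(range(len(candidates)), key=lambda i: squared_distance(vector, candidates[i]))


def build_index(items, centroids):
    """Group item ids into buckets by their nearest centroid."""
    buckets = {i: [] for i in range(len(centroids))}
    for item_id, vector in items.items():
        buckets[nearest_index(vector, centroids)].append(item_id)
    return buckets


def ann_search(query, items, centroids, buckets, n_probe=1):
    """Search only the n_probe closest buckets instead of every item."""
    order = sorted(range(len(centroids)), key=lambda i: squared_distance(query, centroids[i]))
    candidates = [item_id for c in order[:n_probe] for item_id in buckets[c]]
    if not candidates:
        return None
    return min(candidates, key=lambda item_id: squared_distance(query, items[item_id]))


# Toy data: two obvious clusters; centroids assumed to come from k-means.
items = {"a": [0.0, 0.0], "b": [0.1, 0.2], "c": [5.0, 5.0], "d": [5.2, 4.9]}
centroids = [[0.0, 0.0], [5.0, 5.0]]
buckets = build_index(items, centroids)
print(ann_search([5.1, 5.0], items, centroids, buckets))
```

Raising `n_probe` checks more buckets, which trades speed for recall; with `n_probe` equal to the number of centroids the search becomes exact.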

RQ-VAE (Residual-Quantized Variational Autoencoder) compresses each embedding into a short sequence of codebook indices while preserving most of its semantic meaning. This reduces storage needs and speeds up lookups.
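The quantization step at the core of this approach can be sketched on its own: each level picks the nearest codebook entry, then passes the leftover residual to the next level. The codebooks below are hand-written for illustration; in an actual RQ-VAE they are learned jointly with an encoder and decoder, which this sketch omits.

```python
def nearest_code(vector, codebook):
    """Index of the codebook entry closest to the vector."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((v - c) ** 2 for v, c in zip(vector, codebook[i])),
    )


def residual_quantize(vector, codebooks):
    """Encode a vector as one code index per codebook level."""
    residual = list(vector)
    codes = []
    for codebook in codebooks:
        index = nearest_code(residual, codebook)
        codes.append(index)
        # Subtract the chosen entry; the next level encodes what is left.
        residual = [r - c for r, c in zip(residual, codebook[index])]
    return codes, residual


def reconstruct(codes, codebooks):
    """Approximate the original vector by summing the chosen entries."""
    out = [0.0] * len(codebooks[0][0])
    for index, codebook in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, codebook[index])]
    return out


# Hypothetical two-level codebooks: coarse first, fine second.
codebooks = [
    [[0.0, 0.0], [1.0, 1.0]],
    [[0.0, 0.0], [0.1, -0.1]],
]
codes, residual = residual_quantize([1.1, 0.9], codebooks)
print(codes, reconstruct(codes, codebooks))
```

Storing a few small integers per item instead of a full float vector is where the storage savings come from.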

Dual-Tower Architecture and Efficiency

The dual-tower approach processes queries and content separately through specialized neural networks. This split architecture enables pre-computation of content embeddings.

Key benefits include:

- Faster inference time

- Lower computational costs

- Better scaling for large datasets

We encode queries and content items independently, then compute similarity scores between their embeddings. This method lets us handle millions of items efficiently.
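A minimal sketch of this flow follows, with each tower reduced to a single linear layer. The weights and feature vectors are made-up values for illustration; real towers are deeper networks trained on interaction data.

```python
def encode(features, weights):
    """A toy 'tower': one linear layer mapping features to an embedding."""
    return [sum(f * w for f, w in zip(features, row)) for row in weights]


def dot(a, b):
    """Dot-product similarity between two embeddings."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical tower weights (one row per output dimension).
QUERY_TOWER = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]
ITEM_TOWER = [[0.5, 1.0], [1.0, 0.5]]

ITEM_FEATURES = {"item_a": [1.0, 0.0], "item_b": [0.0, 1.0]}

# Offline step: item embeddings are computed once and stored.
ITEM_EMBEDDINGS = {
    item_id: encode(features, ITEM_TOWER)
    for item_id, features in ITEM_FEATURES.items()
}


def rank_items(query_features):
    """Online step: encode only the query, then score the stored items."""
    query_embedding = encode(query_features, QUERY_TOWER)
    scores = {
        item_id: dot(query_embedding, embedding)
        for item_id, embedding in ITEM_EMBEDDINGS.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


print(rank_items([1.0, 0.0, 0.0]))
```

Because the item tower never sees the query, its outputs can be cached or placed in an ANN index, which is what keeps request-time cost low.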

The architecture supports both sparse and dense representations, combining traditional keyword matching with semantic understanding.

Future Trends and Challenges in LLM-Based CTR and Conversion Strategies

AI-powered CTR prediction and conversion optimization are evolving rapidly. New advances in multimodal processing and semantic understanding will reshape how we target and engage users.

Unified Frameworks and Multimodal Integration

Large language models are becoming more efficient at processing longer sequences of user behavior data, enabling better prediction accuracy.

We see growing adoption of unified frameworks that combine text, image, and video data to understand user preferences holistically. These frameworks use multimodal content embeddings to capture rich cross-modal relationships.

Modality alignment pretraining helps LLMs understand connections between different content types. For example, matching product descriptions with images improves recommendation relevance.

New techniques for modality transformation allow seamless conversion between formats, making it easier to analyze mixed-media content engagement patterns.

Content-Driven and Semantic Recommendation Advances

Content-derived features provide deeper insight into why users engage with specific items. Semantic IDs replace traditional hash-based approaches for more meaningful content grouping.

Advanced LLM capabilities enable real-time analysis of content quality and relevance. This helps predict which items will drive higher engagement.

We can now map complex relationships between content pieces based on semantic similarity rather than just surface-level attributes.

Smart content tagging systems automatically extract key themes and topics, improving targeting precision.

Ethical Considerations and User Trust

Privacy-preserving techniques help balance personalization with data protection. We must carefully consider how we use sensitive user information.

Evaluation frameworks now assess both performance metrics and ethical implications of LLM-based targeting.

Clear disclosure about AI-driven recommendations builds user trust. Transparent explanations help users understand why specific content is shown to them.

Regular audits ensure recommendation systems remain fair and unbiased across different user segments.

Frequently Asked Questions

Language models deliver measurable improvements to advertising performance through better copy, personalization, and real-time optimization. They help create content that connects with target audiences while maintaining compliance with advertising standards.

How can language models be integrated into advertising copy to increase engagement?

Smart language models boost conversion rates by analyzing successful ad patterns and creating targeted messaging.

We can feed historical ad performance data into LLMs to generate copy variations that match proven engagement patterns.

The models learn from high-performing ads to create compelling headlines, descriptions, and calls-to-action that resonate with specific audience segments.
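One lightweight way to feed historical performance into a model is through the prompt itself. The sketch below only assembles the prompt text; the field names (`headline`, `ctr`), the wording, and the 60-character limit are assumptions for illustration, and the call to an actual LLM is left out.

```python
def build_ad_prompt(product, audience, past_ads, n_variations=3, n_examples=2):
    """Assemble a prompt that shows the model the best-performing past ads."""
    best = sorted(past_ads, key=lambda ad: ad["ctr"], reverse=True)[:n_examples]
    examples = "\n".join(
        f'- "{ad["headline"]}" (CTR {ad["ctr"]:.1%})' for ad in best
    )
    return (
        f"Write {n_variations} ad headlines for {product}.\n"
        f"Target audience: {audience}.\n"
        "These past headlines performed best:\n"
        f"{examples}\n"
        "Match their tone and keep each headline under 60 characters."
    )


# Hypothetical historical ads.
past_ads = [
    {"headline": "New arrivals are here", "ctr": 0.011},
    {"headline": "Run farther, recover faster", "ctr": 0.048},
    {"headline": "Save on trail gear today", "ctr": 0.032},
]
print(build_ad_prompt("trail running shoes", "weekend runners", past_ads))
```

Generated headlines would still need review and testing before launch, since past performance does not guarantee that a new variation will work.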

What are effective strategies for A/B testing ad copy generated by language models?

We recommend testing multiple LLM-generated variations against a control group to measure impact accurately.

Track metrics like CTR and conversion rates across different audience segments and platforms.

Test variations in tone, message length, and value propositions to identify what drives the best performance.
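For the test itself, each user should see the same variation on every visit. A common way to get that is hash-based bucketing, sketched below; the experiment name and variant labels are hypothetical.

```python
import hashlib


def assign_variant(user_id, experiment, variants):
    """Deterministically map a user to one variant of an experiment.

    Hashing the experiment name together with the user id keeps
    assignments stable per user and independent across experiments.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Hypothetical experiment with a control and two LLM-generated variations.
variants = ["control", "llm_short_copy", "llm_long_copy"]
for user_id in ["user-1", "user-2", "user-3"]:
    print(user_id, assign_variant(user_id, "headline-test", variants))
```

Because the assignment depends only on the ids, no lookup table is needed and results can be recomputed later when analyzing CTR and conversion rates per variant.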

In what ways do language models assist in personalizing content for higher conversion rates?

LLMs analyze customer data to create personalized shopping experiences tailored to individual preferences.

The models can generate custom product descriptions, recommendations, and promotional offers based on past behavior.

Personalized discounts powered by LLMs can increase conversion rates through more relevant offers. Gains of 10-15% are sometimes cited, though results vary by business and offer.

Can language models predict and adapt to changing customer behaviors in real-time to improve CTR?

LLMs continuously analyze interaction patterns to identify shifts in customer preferences and behavior.

We can use these insights to automatically adjust messaging and offers in real-time.

The models detect common questions and objections to improve response relevance.

What is the role of natural language generation in creating scalable and relevant ad content?

LLMs enable rapid creation of unique ad variations for different platforms, audiences, and campaign goals.

The technology maintains consistent brand voice while adapting content to specific contexts and requirements.

How do language models ensure compliance with advertising standards and regulations?

We incorporate compliance rules and guidelines into the LLM training process.

The models can flag potential compliance issues and suggest compliant alternatives automatically.

Regular updates keep the models current with evolving advertising standards and requirements.